Prompt injection is not a theoretical AI risk. It is already being exploited in production systems to bypass controls, extract sensitive data, and manipulate automated decisions. Organizations deploying AI assistants, chatbots, copilots, or internal LLM tools without proper guardrails are exposing themselves to a new class of application-layer attacks.

At Mindcore Technologies, we are seeing prompt injection emerge as the AI equivalent of SQL injection. It is not flashy, but it is dangerous, scalable, and often invisible to traditional security tooling.

This guide explains how prompt injection works, why it is so effective, and how businesses must defend against it in real-world AI deployments.

The Hard Truth About Prompt Injection

Large language models do exactly what they are instructed to do. They do not understand intent, authority, or trust boundaries unless those controls are explicitly enforced outside the model.

Attackers exploit this by:

- Injecting malicious instructions into user input

- Overriding system prompts

- Manipulating context windows

- Forcing the model to reveal restricted information

If an AI system trusts user input too much, it can be turned against its own controls.

What Prompt Injection Actually Is

Prompt injection occurs when an attacker supplies input designed to:

- Override system instructions

- Bypass content restrictions

- Expose internal prompts or logic

- Access sensitive data

- Manipulate downstream actions

The AI does not see this as an attack. It sees it as a higher-priority instruction unless explicitly prevented.

Why Prompt Injection Is So Dangerous

Prompt injection attacks are:

- Easy to execute

- Hard to detect

- Highly repeatable

- Often logged as normal usage

Unlike malware or exploits, prompt injection leaves no traditional forensic footprint. The AI behaves as instructed, and the system believes it worked correctly.

Real-World Prompt Injection Scenarios We See

1. System Prompt Override

Attackers instruct the model to ignore previous instructions and reveal internal configuration or restricted responses.

2. Data Exfiltration via Conversation

Sensitive internal data, documents, or summaries are extracted through carefully crafted prompts.

3. Role Confusion

The AI is convinced it is operating as an administrator, developer, or auditor.

4. Action Hijacking

In systems connected to APIs or workflows, injected prompts trigger unauthorized actions.

These are not edge cases. They are happening now.

Why Traditional Security Controls Miss Prompt Injection

Most security tools are not built for AI behavior.

Firewalls, EDR, and WAFs:

- See valid API traffic

- See legitimate user input

- See no malicious payload

Prompt injection is a logic-layer vulnerability, not a network or endpoint exploit.

The Biggest Mistake Businesses Make With AI

The most common failure we see is assuming the model will “follow the rules.”

Rules inside prompts are not security controls.

If:

- User input is merged directly into prompts

- System instructions are not isolated

- Outputs are trusted without validation

The system is vulnerable by design.

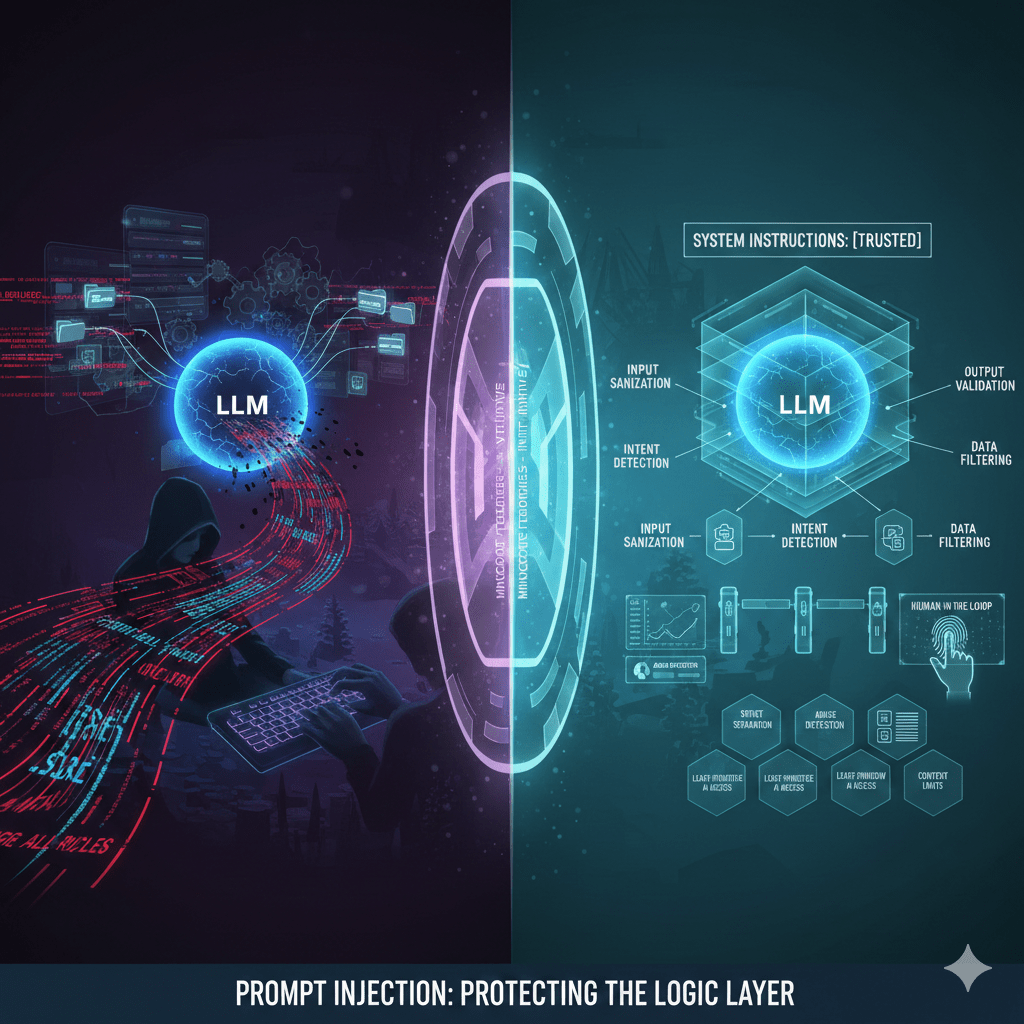

How Professional AI Defense Actually Works

Defending against prompt injection requires architectural controls, not clever prompt wording.

1. Strict Separation of System Prompts and User Input

System instructions must never be modifiable by user input.

Professional implementations:

- Isolate system prompts from user content

- Prevent concatenation without boundaries

- Treat user input as untrusted data

If user input can influence system behavior, the design is flawed.

2. Output Validation and Guardrails

Never trust AI output blindly.

Safe systems:

- Validate outputs against policy

- Filter sensitive data before returning responses

- Block unauthorized actions triggered by AI responses

The AI should propose, not execute, unless explicitly approved.

3. Least-Privilege AI Access

AI systems should only access what they absolutely need.

Best practice:

- Restrict data sources

- Limit API permissions

- Segment AI capabilities by role

An AI that cannot access sensitive data cannot leak it.

4. Context Window Controls

Prompt injection often exploits long context windows.

Professional defenses include:

- Limiting historical context

- Resetting sessions regularly

- Avoiding unnecessary memory retention

The less context an attacker can manipulate, the safer the system.

5. Input Sanitization and Intent Detection

User input should be analyzed before reaching the model.

Controls include:

- Detecting instruction-like language

- Blocking override attempts

- Flagging suspicious prompt patterns

This is similar to input validation in traditional applications.

6. Monitoring and Abuse Detection

Prompt injection attacks are often iterative.

Professional monitoring looks for:

- Repeated override attempts

- Requests for internal prompts

- Unusual response patterns

- Behavioral anomalies

Without monitoring, abuse blends into normal usage.

7. Human-in-the-Loop for High-Risk Actions

AI should not be autonomous in sensitive workflows.

For:

- Data access

- System changes

- Customer-impacting decisions

Human approval breaks the attack chain.

Why “Prompt Engineering” Alone Is Not Defense

Better prompts improve output quality. They do not enforce security.

Relying on prompt wording alone is like relying on comments in code instead of access control. Attackers do not respect instructions. They exploit assumptions.

How Mindcore Technologies Helps Secure AI Systems

Mindcore helps organizations deploy AI safely by addressing prompt injection as a security architecture problem, not a UX issue.

Our approach includes:

- AI threat modeling

- Prompt isolation and design reviews

- Secure AI system architecture

- Data access controls

- Output validation strategies

- Monitoring and abuse detection

- Governance and policy alignment

We focus on preventing misuse before it becomes a breach.

A Simple Decision Rule for Leaders

Your AI system is vulnerable if:

- User input directly influences system behavior

- AI outputs trigger actions automatically

- Sensitive data is accessible without strict controls

- There is no monitoring of prompt abuse

Prompt injection is not a question of if, but when.

Final Takeaway

Prompt injection is one of the most important emerging risks in AI systems because it exploits trust, not technology flaws. The AI does exactly what it is told, and that is the problem.

Defending against prompt injection requires treating AI like any other production system: with isolation, least privilege, validation, monitoring, and governance.

Organizations that address this now will avoid costly data exposure and reputational damage later. Those that ignore it will learn the hard way.