AI gives cybersecurity teams unprecedented visibility, speed, and scale. It can detect threats faster than humans, correlate massive data sets, and automate response in ways that were impossible just a few years ago. That same power, however, introduces ethical risk when security gains come at the expense of privacy, transparency, or user trust.

At Mindcore Technologies, we see ethics not as a philosophical debate, but as a practical security requirement. Poor ethical decisions around AI tend to resurface as security incidents, compliance failures, or reputational damage.

This article explains the real ethical tensions in AI-driven cybersecurity and how organizations can balance protection with privacy responsibly.

Why Ethics Suddenly Matter More in Cybersecurity

Cybersecurity has always involved trade-offs. What has changed is scale and intimacy.

AI-driven security systems can:

- Monitor user behavior continuously

- Analyze communications, files, and activity patterns

- Infer intent, risk, and anomalies automatically

This level of insight blurs the line between protecting systems and surveilling people.

Ethical failures happen when organizations do not define that boundary clearly.

The Core Ethical Tension: Visibility vs Privacy

Effective cybersecurity requires visibility.

Ethical cybersecurity requires restraint.

AI increases visibility by:

- Collecting more data

- Retaining it longer

- Correlating it more deeply

Without limits, that visibility becomes intrusive and ungoverned.

The ethical challenge is not whether to use AI, but how much data is truly necessary to achieve security outcomes.

Where Ethical Problems Commonly Appear

1. Over-Collection of Data

AI systems are often fed “everything” because it is easier than deciding what is necessary.

Ethical issues:

- Collecting data that exceeds security needs

- Violating data minimization principles

Data access should be justified by a security need, not by convenience.

2. Lack of Transparency

Users often have no idea:

- What data AI security systems collect

- How decisions are made

- What behaviors trigger alerts

Opaque systems erode trust and create internal resistance.

3. Automated Decisions Without Accountability

AI may:

- Flag users as risky

- Trigger investigations

- Restrict access

When these actions occur without human review, accountability disappears.

4. Bias and False Positives

AI models learn from data.

If that data is flawed:

- Certain users may be unfairly flagged

- Legitimate behavior may be treated as malicious

- Disciplinary actions may be triggered incorrectly

Ethical cybersecurity requires safeguards against automated bias.

5. Secondary Use of Security Data

Security telemetry is sometimes reused for:

- Productivity monitoring

- HR decision-making

- Performance evaluation

This repurposing crosses an ethical line and damages trust.

Why “Security at Any Cost” Backfires

Organizations that ignore ethics in AI security face predictable outcomes:

- Employee distrust

- Shadow IT and workarounds

- Legal and compliance exposure

- Public backlash after incidents

Security that undermines trust ultimately weakens itself.

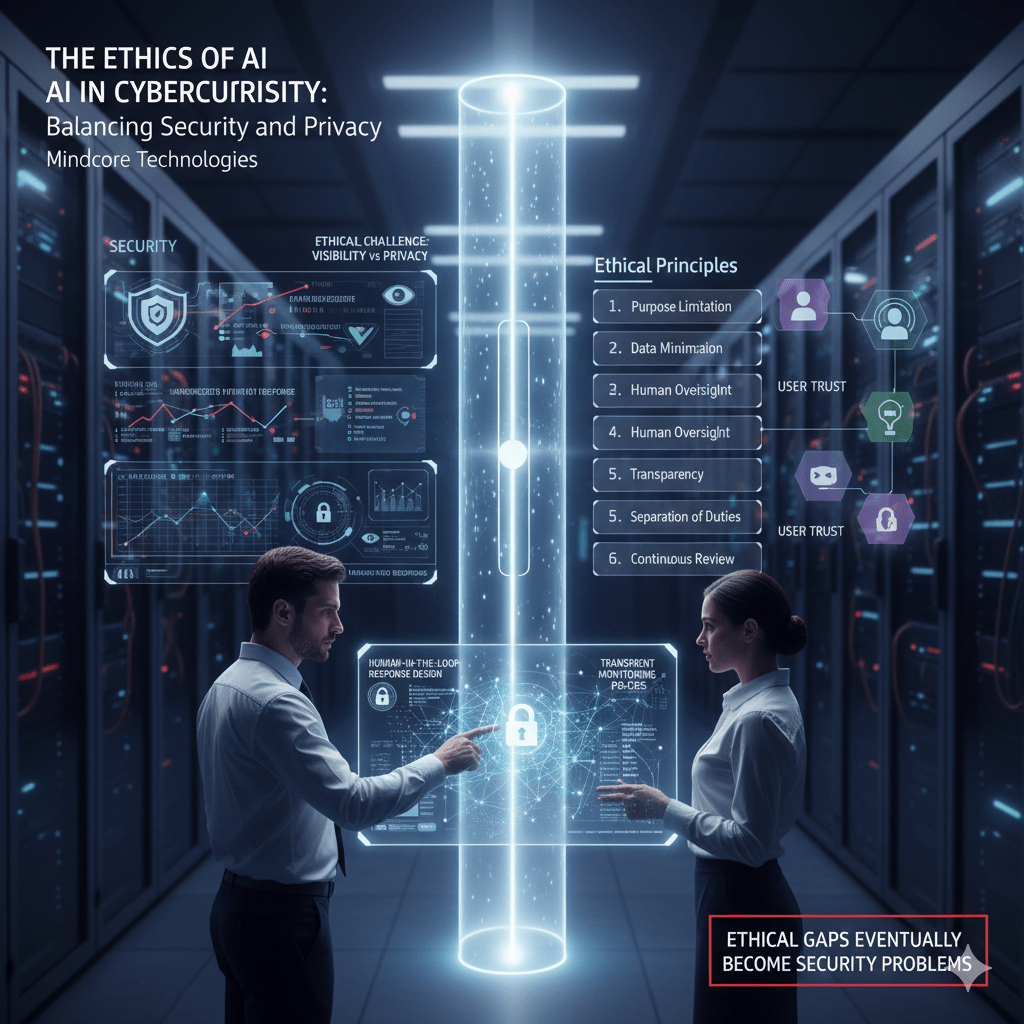

Ethical Principles That Actually Work in Practice

Balancing security and privacy requires explicit principles, not vague intentions.

1. Purpose Limitation

AI security systems should collect data only for security purposes.

If data does not directly support one of the following, it should not be collected:

- Threat detection

- Incident response

- Risk reduction
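
One way to make purpose limitation enforceable is an explicit allowlist that ties every collected field to the security purpose it serves. The sketch below is a minimal illustration in Python, not a reference implementation; the field names, the `ALLOWED_FIELDS` mapping, and the `filter_event` helper are all hypothetical.

```python
# Hypothetical allowlist: each field an event may carry, mapped to the
# security purpose that justifies collecting it.
ALLOWED_FIELDS = {
    "timestamp": "threat detection",
    "source_ip": "threat detection",
    "user_id": "incident response",
    "auth_result": "risk reduction",
}


def filter_event(raw_event: dict) -> dict:
    """Keep only fields with a documented security purpose; drop the rest."""
    return {k: v for k, v in raw_event.items() if k in ALLOWED_FIELDS}


raw = {
    "timestamp": "2024-01-01T12:00:00Z",
    "source_ip": "203.0.113.7",
    "user_id": "u-1001",
    "auth_result": "failure",
    "email_body": "quarterly numbers attached",  # no security purpose listed
}
collected = filter_event(raw)  # email_body is discarded before storage
```

Filtering at the point of collection means that adding a new field requires someone to write down why it is needed.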

2. Data Minimization by Design

More data does not always mean better security.

Ethical AI design:

- Limits data scope

- Reduces retention periods

- Avoids unnecessary content inspection

Less data reduces both privacy risk and breach impact.
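
Shorter retention is the easiest of these to automate. As a rough sketch, assuming each stored event carries a `collected_at` timestamp and that a 30-day window is appropriate (both are illustrative assumptions, and the right window depends on the organization's threat model and legal obligations), a purge step could look like this:

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention window, chosen only for illustration.
RETENTION = timedelta(days=30)


def purge_expired(events: list[dict], now: datetime) -> list[dict]:
    """Return only the events still inside the retention window."""
    cutoff = now - RETENTION
    return [e for e in events if e["collected_at"] >= cutoff]


now = datetime(2024, 3, 1, tzinfo=timezone.utc)
events = [
    {"id": 1, "collected_at": now - timedelta(days=5)},
    {"id": 2, "collected_at": now - timedelta(days=45)},  # past retention
]
kept = purge_expired(events, now)  # only event 1 remains
```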

3. Human Oversight for High-Impact Decisions

AI should assist, not judge.

Best practice:

- AI flags risk

- Humans review context

- Decisions are documented

This preserves accountability and fairness.
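
The flag, review, and document steps above can be sketched as a small workflow in which the model only produces a flag and any action requires a named reviewer and a written rationale. The class names, fields, and the 0 to 1 risk score are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Flag:
    user_id: str
    reason: str
    risk_score: float  # produced by the model; hypothetical 0-1 scale


@dataclass
class Decision:
    flag: Flag
    reviewer: str
    action: str  # e.g. "dismiss" or "restrict_access"
    rationale: str
    decided_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


audit_log: list[Decision] = []


def review(flag: Flag, reviewer: str, action: str, rationale: str) -> Decision:
    """Record a human decision on an AI flag; a rationale is mandatory."""
    if not rationale.strip():
        raise ValueError("a documented rationale is required")
    decision = Decision(flag, reviewer, action, rationale)
    audit_log.append(decision)
    return decision


flag = Flag("u-1001", "unusual login location", 0.82)
decision = review(flag, "analyst-7", "dismiss", "User confirmed business travel.")
```

Because the only path to an action runs through `review`, every outcome in this sketch has a person and a reason attached to it.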

4. Transparency With Stakeholders

Users should understand:

- What is monitored

- Why it is monitored

- How data is protected

Transparency builds trust and reduces resistance.

5. Clear Separation Between Security and Surveillance

Security data should never quietly become a monitoring tool for:

- Productivity

- Behavior enforcement

- Non-security objectives

Ethical boundaries must be enforced organizationally, not just technically.
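
A technical control cannot replace organizational policy, but it can support one. The sketch below assumes every request for security telemetry must declare a purpose, denies anything outside a short list of security purposes, and logs every attempt so that misuse is visible. The purpose names and the `request_telemetry` function are hypothetical.

```python
# Hypothetical set of purposes for which telemetry access is permitted.
SECURITY_PURPOSES = {"threat_detection", "incident_response", "risk_reduction"}

# Every attempt is recorded as (requester, purpose, allowed).
access_log: list[tuple[str, str, bool]] = []


def request_telemetry(requester: str, purpose: str) -> bool:
    """Allow access only for a declared security purpose; log every attempt."""
    allowed = purpose in SECURITY_PURPOSES
    access_log.append((requester, purpose, allowed))
    return allowed


soc_ok = request_telemetry("soc-analyst", "incident_response")    # permitted
hr_ok = request_telemetry("hr-manager", "performance_evaluation")  # denied
```

The denied request still lands in `access_log`, which gives governance reviews something concrete to examine.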

6. Continuous Review and Adjustment

Ethical risk changes as systems evolve.

Organizations must:

- Review AI behavior regularly

- Reassess data access

- Adjust controls as threats and norms change

Ethics is not a one-time decision.

Regulatory Pressure Reflects Ethical Expectations

Regulators increasingly focus on:

- Proportionality

- Transparency

- Accountability

- Explainability

Ethical AI practices align closely with compliance requirements. Ignoring ethics increases regulatory exposure.

The Business Value of Ethical AI in Cybersecurity

Ethical implementation delivers tangible benefits:

- Stronger employee trust

- Better cooperation during incidents

- Reduced legal risk

- More sustainable security programs

Ethics is not a brake on security. It is a stabilizer.

How Mindcore Technologies Approaches Ethical AI Security

At Mindcore Technologies, we help organizations deploy AI-driven cybersecurity with ethics built in, not bolted on.

Our approach includes:

- Privacy-first security architecture

- Data minimization and access controls

- Human-in-the-loop response design

- Transparent monitoring policies

- Governance and accountability frameworks

- Ongoing review and tuning

We believe security that respects privacy is stronger, not weaker.

A Simple Ethical Readiness Check

Your AI security program needs review if:

- Users do not understand what is monitored

- AI decisions occur without human review

- Security data is reused for non-security purposes

- Data collection is broader than necessary

Ethical gaps eventually become security problems.
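
For teams that want to track this check over time, the four warning signs can be expressed as a small self-assessment. This is only a sketch; the key names and the `readiness_gaps` function are hypothetical, and an unanswered question is deliberately treated as a gap.

```python
# The four warning signs from the checklist, keyed by hypothetical names.
# Each key states the healthy condition; the value is the warning sign.
CHECKS = {
    "users_understand_monitoring": "Users do not understand what is monitored",
    "human_review_of_ai_decisions": "AI decisions occur without human review",
    "data_used_only_for_security": "Security data is reused for non-security purposes",
    "collection_limited_to_need": "Data collection is broader than necessary",
}


def readiness_gaps(answers: dict[str, bool]) -> list[str]:
    """Return the warning signs that apply; a missing answer counts as a gap."""
    return [msg for key, msg in CHECKS.items() if not answers.get(key, False)]


gaps = readiness_gaps({
    "users_understand_monitoring": True,
    "human_review_of_ai_decisions": False,
    "data_used_only_for_security": True,
    "collection_limited_to_need": True,
})
```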

Final Takeaway

AI gives cybersecurity teams immense power. Ethics determines whether that power protects or harms the organization.

Balancing security and privacy is not about choosing one over the other. It is about designing systems that achieve security goals without exceeding what is necessary, justified, or defensible.

Organizations that take this balance seriously will build durable, trusted, and effective security programs. Those that ignore it will face resistance and risk, and will eventually be forced to correct course under pressure.