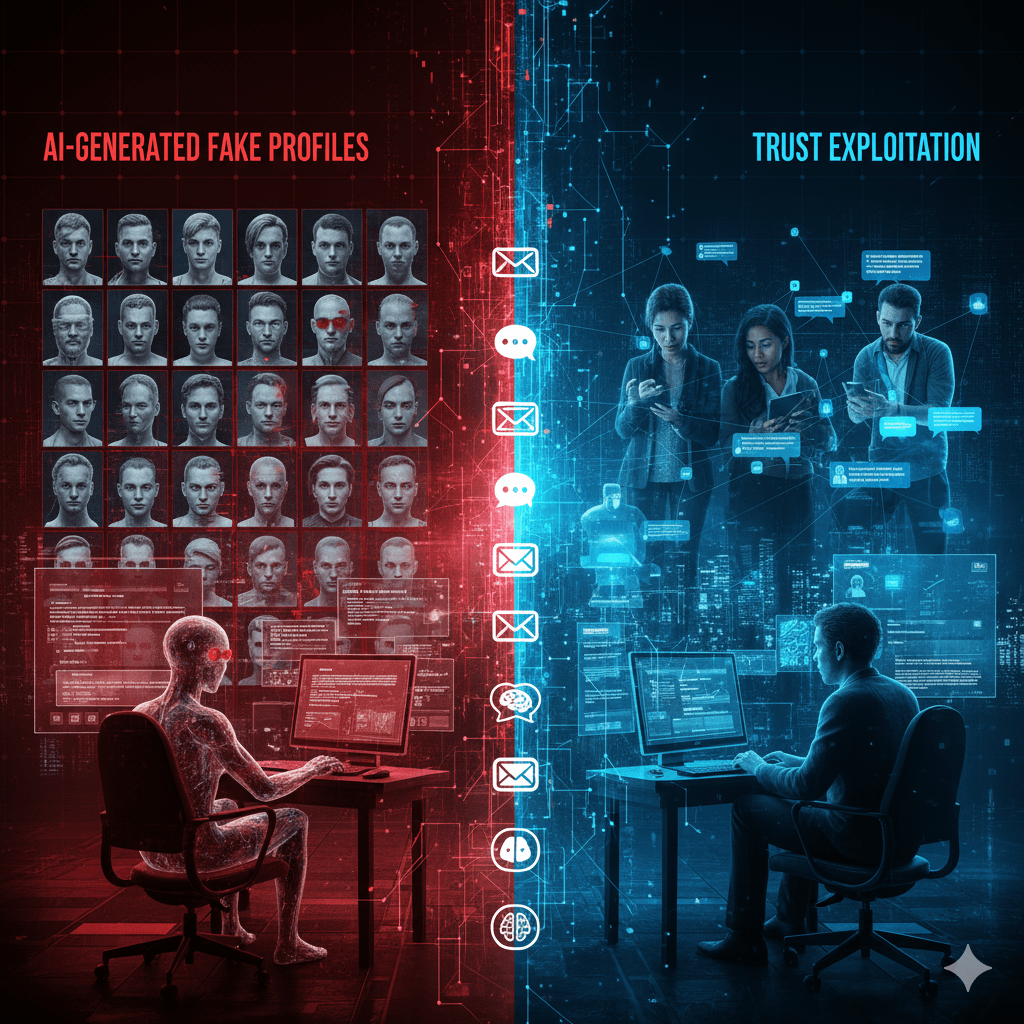

Social engineering did not become more common. It became more believable. AI has removed the friction that once exposed scams: sloppy grammar, inconsistent stories, and limited scale. Today’s attacks are personalized, persistent, and often indistinguishable from legitimate human interaction.

At Mindcore Technologies, we treat AI-driven social engineering as an identity and trust exploitation problem, not an email problem. Fake people are now a scalable attack surface.

This article explains how cybercriminals use AI-generated profiles and content, why traditional awareness training fails, and the exact controls organizations must deploy to stay ahead.

Why AI Changed Social Engineering Permanently

Before AI, social engineering was constrained by human effort.

Now attackers can:

- Generate thousands of realistic personas

- Maintain consistent backstories indefinitely

- Tailor language, tone, and timing per target

- Operate across email, SMS, chat, and social platforms simultaneously

The result is sustained, believable manipulation at machine scale.

What AI-Generated Social Engineering Looks Like Today

1. Fully Synthetic Online Identities

Attackers create profiles with:

- AI-generated headshots

- Consistent employment histories

- Realistic posting behavior

- Long-term interaction patterns

These accounts age naturally, build credibility, and wait.

2. Executive and Peer Impersonation

AI models replicate:

- Writing style

- Vocabulary

- Urgency patterns

- Contextual knowledge

Messages sound exactly like a CEO, vendor, or colleague because the models behind them are trained on real communication.

3. Automated Relationship Building

AI bots:

- Engage targets over weeks or months

- Respond naturally to questions

- Adjust tone based on engagement

- Build rapport before asking anything risky

This defeats “spot the red flag” training.

4. Context-Aware Phishing and Pretexting

AI references:

- Current projects

- Real vendors

- Industry events

- Internal terminology

The message is not generic. It is situational.

5. Multi-Channel Attack Coordination

AI-driven social engineering rarely stays in one channel.

Attackers combine:

- LinkedIn outreach

- Email follow-ups

- SMS urgency

- Voice or video escalation

Trust is built incrementally across platforms.

Why Traditional Social Engineering Defenses Fail

Most defenses assume:

- Attacks are brief

- Messages are obvious

- Users decide quickly

- One channel is used

AI breaks all four assumptions.

Failure points include:

- Training focused on grammar and links

- Trust in internal-looking requests

- Blind approval of familiar names

- Overconfidence once MFA is deployed

When attackers look legitimate, trust becomes the vulnerability.

The Real Objective: Identity Abuse, Not Clicks

Modern social engineering is not about tricking users into clicking links.

It is about:

- Stealing session tokens

- Coercing MFA approvals

- Inducing credential reuse

- Triggering authorized actions

Once identity is abused, everything downstream appears legitimate.

How AI-Generated Content Bypasses Human Intuition

Humans rely on:

- Tone mismatches

- Language errors

- Inconsistencies

AI removes these signals.

Worse, AI adapts based on response. If a user hesitates, the message evolves. If urgency fails, authority is applied. This is adaptive behavioral manipulation, not a one-shot deception.

What Actually Stops AI-Driven Social Engineering

Defense must shift from awareness to control and verification.

1. Make Identity Verifiable, Not Familiar

Trust must be based on verification, not recognition.

Critical controls:

- Phishing-resistant MFA

- Conditional access enforcement

- Device and session validation

- Short-lived sessions

Familiar names should not bypass security.
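As a rough illustration of "verifiable, not familiar," the controls above can be sketched as a single access decision. This is a minimal sketch, not a real conditional access engine: the `AccessRequest` fields, the set of methods treated as phishing-resistant, and the 8-hour session limit are all assumptions chosen for the example.

```python
from dataclasses import dataclass

# Assumption: sessions older than this must re-authenticate.
MAX_SESSION_AGE_SECONDS = 8 * 60 * 60

# Assumption: only these methods count as phishing-resistant in this sketch.
PHISHING_RESISTANT_METHODS = {"fido2", "passkey", "smartcard"}


@dataclass(frozen=True)
class AccessRequest:
    user: str                 # display name or account; never used for trust
    mfa_method: str           # e.g. "fido2", "push", "sms"
    device_managed: bool      # device is enrolled and compliant
    session_age_seconds: int  # time since the last full authentication


def evaluate_access(req: AccessRequest) -> tuple[bool, list[str]]:
    """Return (allowed, reasons). Every check must pass for access."""
    reasons = []
    if req.mfa_method not in PHISHING_RESISTANT_METHODS:
        reasons.append("mfa_not_phishing_resistant")
    if not req.device_managed:
        reasons.append("unmanaged_device")
    if req.session_age_seconds > MAX_SESSION_AGE_SECONDS:
        reasons.append("session_expired")
    # Note: req.user is deliberately ignored. A familiar name earns nothing.
    return (not reasons, reasons)
```

In this sketch, a request from "ceo" using push-based MFA is denied, while an intern with a FIDO2 key on a managed device is allowed. The decision depends on what can be verified, not on who the request appears to come from.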

2. Enforce Out-of-Band Verification for High-Risk Actions

High-risk requests include anything involving:

- Financial changes

- Credential resets

- Access modifications

- Data sharing

Each of these must require secondary verification through a separate channel.
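One way to picture this control is a gate that holds high-risk requests until a one-time code, delivered over a different channel than the request itself, is confirmed. The sketch below is illustrative only: `OutOfBandGate`, the action names, and the delivery step (for example, a call-back to a phone number already on file) are hypothetical, and a production workflow would also need expiry, audit logging, and rate limiting.

```python
import hmac
import secrets
from dataclasses import dataclass, field

# Assumption: action labels mirroring the four high-risk categories above.
HIGH_RISK_ACTIONS = {
    "financial_change",
    "credential_reset",
    "access_modification",
    "data_sharing",
}


@dataclass
class PendingRequest:
    action: str
    requester: str
    # One-time code to be delivered over a separate, pre-registered channel.
    code: str = field(default_factory=lambda: secrets.token_hex(4))
    verified: bool = False


class OutOfBandGate:
    """Holds high-risk requests until they are confirmed out of band."""

    def __init__(self):
        self._pending = {}

    def submit(self, request_id, action, requester):
        req = PendingRequest(action, requester)
        # Low-risk actions pass straight through; high-risk ones wait.
        if action not in HIGH_RISK_ACTIONS:
            req.verified = True
        self._pending[request_id] = req
        return req

    def confirm(self, request_id, code):
        req = self._pending[request_id]
        # Constant-time comparison avoids leaking the code through timing.
        if hmac.compare_digest(req.code, code):
            req.verified = True
        return req.verified

    def may_execute(self, request_id):
        return self._pending[request_id].verified
```

The point of the design is that an attacker who controls the conversation (email, chat, or a cloned voice) still cannot complete the action without also controlling the second, independently registered channel.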

3. Protect Sessions, Not Just Credentials

AI-driven attacks often steal authenticated sessions, which sidesteps the login step where MFA is checked.

Controls must include:

- Session binding to devices

- Continuous authentication

- Token protection and monitoring

MFA alone is not sufficient.
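To make session binding concrete, here is a minimal sketch in which a session token is signed together with a device identifier and expires quickly, so a token replayed from another device fails validation. The key, the 15-minute lifetime, and the plain-string `device_id` are assumptions for illustration; real deployments typically bind sessions to a cryptographic key held on the device rather than to an identifier an attacker could copy.

```python
import hashlib
import hmac
import time

SERVER_KEY = b"demo-key-replace-me"  # hypothetical; load from a secret store
SESSION_TTL_SECONDS = 900            # assumption: 15-minute sessions


def _sign(payload, device_id):
    # The device identifier is part of the signed message but is not stored
    # in the token, so the token alone is not enough to pass validation.
    msg = f"{payload}|{device_id}".encode()
    return hmac.new(SERVER_KEY, msg, hashlib.sha256).hexdigest()


def issue_token(user, device_id, now=None):
    issued = int(time.time() if now is None else now)
    payload = f"{user}|{issued}"
    return f"{payload}|{_sign(payload, device_id)}"


def validate_token(token, device_id, now=None):
    try:
        user, issued, sig = token.split("|")
        issued_at = int(issued)
    except ValueError:
        return False  # malformed token
    current = time.time() if now is None else now
    if current - issued_at > SESSION_TTL_SECONDS:
        return False  # short-lived session has expired
    expected = _sign(f"{user}|{issued}", device_id)
    return hmac.compare_digest(sig, expected)
```

A token issued to one device validates only when presented with that same device identifier and only within the session lifetime, which limits what a stolen token is worth.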

4. Monitor Identity Behavior Continuously

Behavior reveals compromise faster than content.

Watch for:

- Unusual approval patterns

- Abnormal login timing

- Role-inconsistent actions

- Sudden privilege usage

Valid credentials do not equal safe behavior.
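The four signals above can be sketched as a comparison between an event and a per-identity baseline. This is a deliberately simple, rule-based illustration: the `Baseline` and `Event` fields and the thresholds are hypothetical, and real monitoring would learn baselines from history rather than hard-code them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Baseline:
    usual_hours: range           # hours of day when this identity is normally active
    role_actions: frozenset      # actions consistent with the identity's role
    max_approvals_per_hour: int  # normal ceiling for approvals
    uses_elevation: bool         # whether privileged actions are routine


@dataclass(frozen=True)
class Event:
    hour: int                 # hour of day, 0-23
    action: str
    approvals_last_hour: int
    elevated: bool            # event used elevated privileges


def flag_event(event, baseline):
    """Return the list of behavioral flags raised by a single event."""
    flags = []
    if event.hour not in baseline.usual_hours:
        flags.append("abnormal_login_timing")
    if event.action not in baseline.role_actions:
        flags.append("role_inconsistent_action")
    if event.approvals_last_hour > baseline.max_approvals_per_hour:
        flags.append("unusual_approval_pattern")
    if event.elevated and not baseline.uses_elevation:
        flags.append("sudden_privilege_usage")
    return flags
```

Note that nothing here inspects message content or checks whether the credentials were valid. The flags fire on what the identity does, which is the signal that remains when the attacker's messages look legitimate.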

5. Harden Collaboration Platforms

Attackers exploit Teams, Slack, and email alike.

Controls must include:

- External user restrictions

- Link and file inspection

- Domain impersonation protection

- Alerting on abnormal messaging behavior

Social engineering lives where collaboration happens.
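As one small example of domain impersonation protection, the sketch below flags sender domains that are within a couple of character edits of a trusted domain. The trusted list and the distance threshold are assumptions for illustration, and edit distance is only one heuristic: it will not catch every lookalike (for example, Unicode homoglyphs or a different top-level domain combined with other changes).

```python
# Hypothetical allow-list of the organization's own and partner domains.
TRUSTED_DOMAINS = {"example-corp.com"}


def edit_distance(a, b):
    """Levenshtein distance between two strings (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            cur.append(min(prev[j] + 1,          # deletion
                           cur[j - 1] + 1,       # insertion
                           prev[j - 1] + cost))  # substitution
        prev = cur
    return prev[-1]


def is_lookalike(sender_domain, max_distance=2):
    """True if the domain is close to, but not exactly, a trusted domain."""
    domain = sender_domain.lower().rstrip(".")
    if domain in TRUSTED_DOMAINS:
        return False
    return any(edit_distance(domain, trusted) <= max_distance
               for trusted in TRUSTED_DOMAINS)
```

With these assumptions, `examp1e-corp.com` and `exarnple-corp.com` are flagged, while the genuine domain and clearly unrelated domains are not.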

6. Update Training for AI Reality

Training must reflect modern tactics.

Effective programs teach:

- Long-game manipulation

- Executive impersonation

- Multi-channel pressure

- Session hijacking awareness

- Verification discipline

Training must reduce trust reflexes, not just teach caution.

The Biggest Social Engineering Mistake We See

Organizations still treat social engineering as a user failure.

In reality:

- AI attacks are engineered to succeed even against attentive users

- Humans are targeted deliberately

- Systems must compensate for manipulation

Blaming users does not stop attackers.

How Mindcore Technologies Helps Organizations Defend

Mindcore helps organizations counter AI-driven social engineering through:

- Identity-centric security architecture

- Phishing-resistant MFA deployment

- Session and device trust enforcement

- Collaboration platform hardening

- Behavioral monitoring and alerting

- Realistic social engineering readiness programs

We focus on removing attacker leverage, not asking users to be perfect.

A Simple Reality Check for Leadership

You are exposed if:

- Familiar names bypass verification

- MFA approvals are trusted blindly

- Sessions persist indefinitely

- Behavior is not monitored

- Training assumes obvious scams

AI-powered social engineering exploits trust, not ignorance.

Final Takeaway

AI has transformed social engineering into a scalable, believable, and persistent attack model. Fake profiles are no longer fake-looking. Messages are no longer suspicious by tone alone. Trust is the primary exploit.

Organizations that redesign security around identity verification, behavioral monitoring, and controlled actions will reduce risk dramatically. Those that rely on awareness alone will continue to be compromised by attacks that look and feel legitimate.