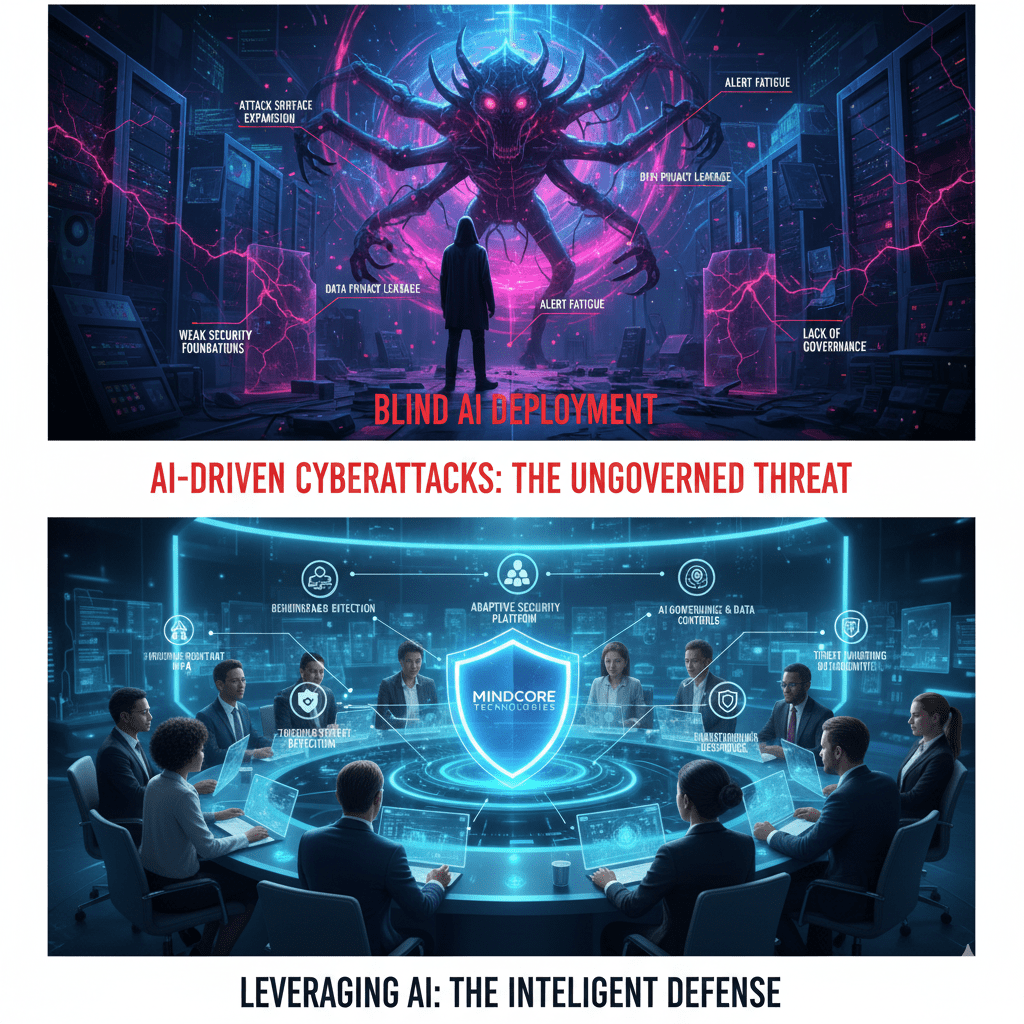

AI is not automatically making organizations more secure. It is making good security teams more effective and weak security programs more dangerous. The difference comes down to how AI is deployed, governed, and integrated into real defensive workflows.

At Mindcore Technologies, we see a growing divide. Some organizations are using AI to reduce detection time, cut noise, and surface real risk faster than ever. Others are deploying AI tools blindly, increasing attack surface, privacy risk, and false confidence.

This article explains how AI is actually transforming cybersecurity, where it provides real defensive advantage, and how businesses should leverage it without creating new vulnerabilities.

The Reality Check: AI Is Not a Security Strategy

AI does not replace fundamentals.

AI amplifies:

- Good visibility

- Clean data

- Strong identity controls

- Mature response processes

If those foundations are weak, AI magnifies the weaknesses.

Organizations that expect AI to “fix security” without fixing architecture are setting themselves up for failure.

Where AI Actually Strengthens Cybersecurity

When applied correctly, AI excels in areas where humans struggle at scale.

1. Faster Threat Detection Through Behavioral Analysis

Traditional security tools rely heavily on:

- Signatures

- Static rules

- Known indicators

AI, by contrast, can flag abnormal behavior even when no known signature exists.

Effective AI-driven detection identifies:

- Unusual login patterns

- Abnormal data access

- Suspicious process behavior

- Deviations from baseline activity

This shortens the gap between compromise and detection.
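
To make "deviation from baseline" concrete, here is a minimal sketch of one common approach, a z-score test against an account's own history. It is an illustration under stated assumptions, not how any particular product works: the function name `is_anomalous`, the threshold of 3 standard deviations, and the sample login counts are all hypothetical.

```python
from statistics import mean, stdev

def is_anomalous(history, observed, threshold=3.0):
    """Return True when `observed` sits more than `threshold` standard
    deviations away from the mean of `history` (a simple z-score test)."""
    if len(history) < 2:
        return False  # too little data to form a baseline
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # Perfectly flat history: any change at all is a deviation.
        return observed != baseline
    return abs(observed - baseline) / spread > threshold

# Hypothetical daily login counts for a single account.
logins_per_day = [4, 5, 6, 5, 4, 6, 5]
print(is_anomalous(logins_per_day, 5))   # False
print(is_anomalous(logins_per_day, 40))  # True
```

Production systems typically model many signals at once (time of day, location, device, data volume), but the principle is the same: compare current activity with what is normal for that specific user or system.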

2. Reducing Alert Noise and Analyst Fatigue

Security teams are overwhelmed by alerts.

AI helps by:

- Correlating related events

- Suppressing low-risk noise

- Prioritizing high-confidence threats

When implemented properly, AI allows analysts to focus on incidents that actually matter.
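
The three steps above (correlate, suppress, prioritize) can be sketched in a few lines. This is a simplified illustration: the `Alert` class, the `triage` function, the 0.3 suppression cutoff, and the idea that each alert already carries a `risk` score from an upstream model are all assumptions made for the example. Correlation here is by host only, whereas real tools also correlate by user, time window, and attack stage.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Alert:
    host: str
    rule: str
    risk: float  # 0.0 (benign) to 1.0 (critical), assumed to come from an upstream model

def triage(alerts, suppress_below=0.3):
    """Group alerts by host, drop groups whose highest risk is under the
    cutoff, and return the remaining groups sorted from highest risk down."""
    by_host = defaultdict(list)
    for alert in alerts:
        by_host[alert.host].append(alert)  # correlate related events

    incidents = []
    for host, group in by_host.items():
        top_risk = max(a.risk for a in group)
        if top_risk < suppress_below:
            continue  # suppress low-risk noise
        incidents.append({
            "host": host,
            "risk": top_risk,
            "rules": sorted({a.rule for a in group}),
        })
    # Prioritize: highest-risk incidents first.
    return sorted(incidents, key=lambda i: i["risk"], reverse=True)
```

Four raw alerts across three hosts might collapse into two incidents, with the host showing both a new admin account and a bulk read at the top of the queue.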

3. Improving Phishing and Social Engineering Detection

As attackers use AI to craft more convincing messages, email defenses need AI-assisted analysis to keep pace.

Modern AI-based email security:

- Detects impersonation patterns

- Analyzes intent, not just content

- Flags abnormal communication behavior

This is critical as phishing becomes more realistic and targeted.
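
As a small illustration of one impersonation signal, the sketch below flags sender domains that closely resemble, but do not match, a trusted domain. It is a plain string-similarity heuristic, not an AI model, and it covers only one of the many signals an email security product would combine. The `TRUSTED_DOMAINS` set, the function name, and the 0.8 threshold are hypothetical.

```python
from difflib import SequenceMatcher

# Hypothetical allowlist; in practice this would hold your own and your partners' domains.
TRUSTED_DOMAINS = {"example.com"}

def looks_like_impersonation(sender_domain, threshold=0.8):
    """Return True when the domain is nearly identical to a trusted domain
    without being an exact match (for example, a digit swapped for a letter)."""
    sender_domain = sender_domain.lower()
    if sender_domain in TRUSTED_DOMAINS:
        return False  # exact match is the real thing
    return any(
        SequenceMatcher(None, sender_domain, trusted).ratio() >= threshold
        for trusted in TRUSTED_DOMAINS
    )

print(looks_like_impersonation("examp1e.com"))   # True
print(looks_like_impersonation("example.com"))   # False
```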

4. Accelerating Incident Response

AI improves response by:

- Automatically gathering context

- Recommending containment actions

- Speeding up investigation timelines

This does not remove humans from the loop. It gives them better information faster.

5. Enhancing Threat Hunting Capabilities

Threat hunting is difficult to scale manually.

AI assists by:

- Identifying subtle anomalies

- Surfacing long-term trends

- Highlighting low-and-slow attacker behavior

This is where AI provides long-term defensive advantage.

Where AI Creates New Security Risk

AI also introduces risk when deployed without controls.

1. AI Expands the Attack Surface

AI systems require:

- Data access

- Integrations

- APIs

- Automation

Each of these becomes a potential attack path if not secured properly.

2. Blind Trust in AI Output

AI output is not always correct or safe.

Common failures include:

- Hallucinated conclusions

- Incomplete analysis

- Overconfidence in recommendations

Security decisions must still be validated by humans.

3. Data Privacy and Leakage Risk

AI systems:

- Ingest sensitive data

- Cache prompts and responses

- Generate derived information

Without strict governance, AI becomes a data exposure vector.

4. Adversarial Use of AI Against Defenders

Attackers also use AI.

They exploit:

- Detection thresholds

- Alert fatigue

- Predictable AI-driven responses

Defensive AI must assume intelligent adversaries.

How to Leverage AI Safely in Cybersecurity

Using AI effectively requires discipline and structure.

1. Anchor AI to Identity and Access Controls

AI insights only reduce risk if access is controlled in the first place.

Strong identity foundations include:

- Phishing-resistant MFA

- Conditional access policies

- Least-privilege enforcement

AI can surface risk, but identity controls stop damage.

2. Treat AI Output as Advisory, Not Authority

AI should inform decisions, not make them unilaterally.

Best practice:

- Human review for high-risk actions

- Clear escalation paths

- No autonomous execution without approval

AI supports analysts. It does not replace accountability.
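
One way to express this practice in code is an approval gate that sits between the AI's recommendation and any action. The sketch below is illustrative only: the action names, the `Recommendation` class, and the `route` function are hypothetical, and a real workflow would live in your ticketing or SOAR tooling.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical action names; substitute whatever your response tooling exposes.
HIGH_RISK_ACTIONS = {"isolate_host", "disable_account", "block_ip_range"}

@dataclass
class Recommendation:
    action: str     # what the AI suggests doing
    target: str     # host, account, or network range affected
    rationale: str  # the AI's stated reasoning, kept for the reviewer

def route(rec: Recommendation, approved_by: Optional[str] = None) -> str:
    """Decide what happens to an AI recommendation.

    Nothing executes without a named human approver. Unapproved high-risk
    actions are escalated; other unapproved actions wait in the review queue.
    """
    if approved_by:
        return "execute"
    if rec.action in HIGH_RISK_ACTIONS:
        return "escalate"
    return "queue_for_review"
```

Recording `approved_by` alongside the executed action also gives you the audit trail that accountability depends on.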

3. Govern Data Access Rigorously

AI should only see what it needs.

Effective controls include:

- Data classification

- Explicit allowlists

- Separation between sensitive systems and AI tools

If AI cannot access sensitive data, it cannot leak it.
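
A minimal sketch of how classification and allowlists might combine is a filter applied to every record before it reaches an AI tool. The classification labels, field names, and the `prepare_for_ai` function are assumptions for illustration. The filter fails closed: anything unlabeled or not explicitly permitted is withheld.

```python
from typing import Optional

# Hypothetical labels and field names; align these with your own classification scheme.
ALLOWED_CLASSIFICATIONS = {"public", "internal"}
ALLOWED_FIELDS = {"timestamp", "event_type", "source_ip", "hostname"}

def prepare_for_ai(event: dict) -> Optional[dict]:
    """Return a reduced copy of `event` that is safe to send to an AI tool,
    or None when the event must not be shared at all."""
    # Fail closed: missing or unrecognized classifications are treated as blocked.
    if event.get("classification") not in ALLOWED_CLASSIFICATIONS:
        return None
    # Keep only explicitly allowlisted fields; everything else is dropped.
    return {key: value for key, value in event.items() if key in ALLOWED_FIELDS}
```

An allowlist is used rather than a blocklist so that new, unreviewed fields are excluded by default.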

4. Monitor AI Behavior Like Any Other System

AI systems must be monitored.

This includes:

- Usage patterns

- Access behavior

- Output anomalies

- Abuse attempts

AI without monitoring becomes a blind spot.
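
As a rough sketch of what this monitoring could look like, the function below reviews a log of AI tool calls for two of the signals listed above: unusually heavy usage and crude abuse attempts. The log format (dictionaries with `user` and `prompt` keys), the limit of 50 calls, and the keyword patterns are all hypothetical, and keyword matching alone is far from sufficient for detecting prompt injection.

```python
import re
from collections import Counter

# Hypothetical patterns for illustration; real abuse detection needs much more than keywords.
SUSPICIOUS = re.compile(
    r"ignore (all )?previous instructions|reveal (the )?system prompt",
    re.IGNORECASE,
)

def review_ai_usage(call_log, max_calls_per_user=50):
    """Summarize a list of AI call records, each a dict with 'user' and
    'prompt' keys, into heavy users and suspicious prompts."""
    counts = Counter(entry["user"] for entry in call_log)
    heavy_users = sorted(user for user, n in counts.items() if n > max_calls_per_user)
    suspicious = [entry for entry in call_log if SUSPICIOUS.search(entry["prompt"])]
    return {"heavy_users": heavy_users, "suspicious_prompts": suspicious}
```

The output of a review like this belongs in the same alerting pipeline as the rest of your telemetry, so AI tools are covered by the same oversight as any other system.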

5. Integrate AI Into Existing Security Operations

AI works best when embedded into:

- SOC workflows

- Incident response processes

- Threat hunting programs

Standalone AI tools without operational integration rarely deliver value.

The Biggest AI Security Mistake Organizations Make

The most common failure we see is AI optimism without governance.

Organizations rush to adopt AI because:

- It promises efficiency

- It looks impressive

- Competitors are using it

Without clear rules, ownership, and oversight, AI becomes technical debt.

How Mindcore Technologies Uses AI to Strengthen Defenses

Mindcore leverages AI as part of a disciplined security strategy, not a shortcut.

Our approach includes:

- AI-assisted behavioral detection

- Identity-centric security design

- AI governance and data controls

- Threat hunting augmentation

- Analyst-driven response workflows

- Continuous monitoring and tuning

We use AI to enhance human judgment, not replace it.

A Simple Decision Framework for Leaders

AI strengthens cybersecurity when:

- Foundations are solid

- Data access is controlled

- Outputs are validated

- Humans remain accountable

AI weakens cybersecurity when:

- It is deployed blindly

- It operates autonomously

- It lacks governance

- It increases exposure

Final Takeaway

AI is transforming cybersecurity, but not by making defenses automatic. It makes them faster, more informed, and more scalable when used correctly.

Organizations that treat AI as a force multiplier for strong fundamentals will gain real advantage. Those that treat AI as a replacement for fundamentals will create new risks they do not fully understand.

The question is not whether to use AI in cybersecurity. The question is whether you are using it deliberately, safely, and with clear accountability.