AI systems do not create new data privacy risks. They amplify existing ones. The difference is scale, speed, and opacity. Data that once sat quietly in databases is now fed into models, shared across tools, logged, cached, summarized, and reused in ways most organizations cannot fully trace.

At Mindcore Technologies, we see the same issue across industries. Companies adopt AI for efficiency, but their data governance model was designed for humans, not machines. That gap is where privacy failures occur.

This article explains how AI changes data privacy risk, why traditional controls are insufficient, and what organizations must do to stay in control.

The Core Privacy Problem With AI

AI systems require access to data to function. The more an AI system is expected to do, the more data it typically needs to reach.

That creates three unavoidable realities:

- Data moves more frequently

- Data is processed in more places

- Data exposure paths multiply

Privacy risk is no longer tied to a single system. It is tied to how data flows through AI-enabled workflows.

Why AI Makes Privacy Harder, Not Easier

1. Data Is Repurposed Constantly

AI systems reuse data for:

- Training

- Inference

- Context building

- Memory and retrieval

- Analytics

Data collected for one purpose is often reused for another, sometimes without explicit approval or awareness.

2. Visibility Drops as Automation Increases

Traditional privacy controls rely on:

- Access logs

- User permissions

- Clear system boundaries

AI systems blur those boundaries. Data may be:

- Embedded in prompts

- Stored in vector databases

- Cached for performance

- Logged for debugging

Without deliberate design, visibility disappears.

3. Sensitive Data Becomes Indirectly Exposed

AI does not need raw records to leak sensitive information.

It can:

- Summarize confidential content

- Infer private attributes

- Reconstruct sensitive details

- Combine harmless data into sensitive output

Privacy risk is no longer limited to direct access.
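The "harmless data combined into sensitive output" case can be illustrated with a small group-size check, the idea behind k-anonymity. This is a minimal sketch with made-up records; the field names and the `smallest_group` helper are assumptions for illustration, not part of any specific product.

```python
from collections import Counter

# Made-up records: no single field singles anyone out.
people = [
    {"zip": "19103", "birth_year": 1984, "job": "nurse"},
    {"zip": "19103", "birth_year": 1990, "job": "teacher"},
    {"zip": "08002", "birth_year": 1984, "job": "teacher"},
    {"zip": "08002", "birth_year": 1990, "job": "nurse"},
]


def smallest_group(rows, fields):
    """Size of the smallest group of rows that share the same values for `fields`."""
    counts = Counter(tuple(row[f] for f in fields) for row in rows)
    return min(counts.values())


# Each ZIP code alone is shared by two people.
print(smallest_group(people, ["zip"]))
# ZIP code plus birth year together point at exactly one person.
print(smallest_group(people, ["zip", "birth_year"]))
```

A group size of one means the combination identifies an individual, even though each field looked harmless on its own. An AI system that joins such fields across sources can produce that combination without ever touching a "sensitive" record.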

Common Privacy Failures We See in AI Deployments

1. Feeding Sensitive Data Into Models Without Controls

Organizations allow AI tools to ingest emails, documents, tickets, or chat logs without proper classification or restrictions.

2. No Clear Boundary Between Internal and External AI

Employees use consumer AI tools with business data, creating uncontrolled third-party exposure.

3. Over-Permissive AI Access

AI systems are given broad access “for convenience,” violating least-privilege principles.

4. Lack of Output Review

AI-generated responses are trusted without validation, even when they expose confidential data.

5. No Data Retention Strategy for AI

Prompts, outputs, embeddings, and logs are stored indefinitely without privacy review.

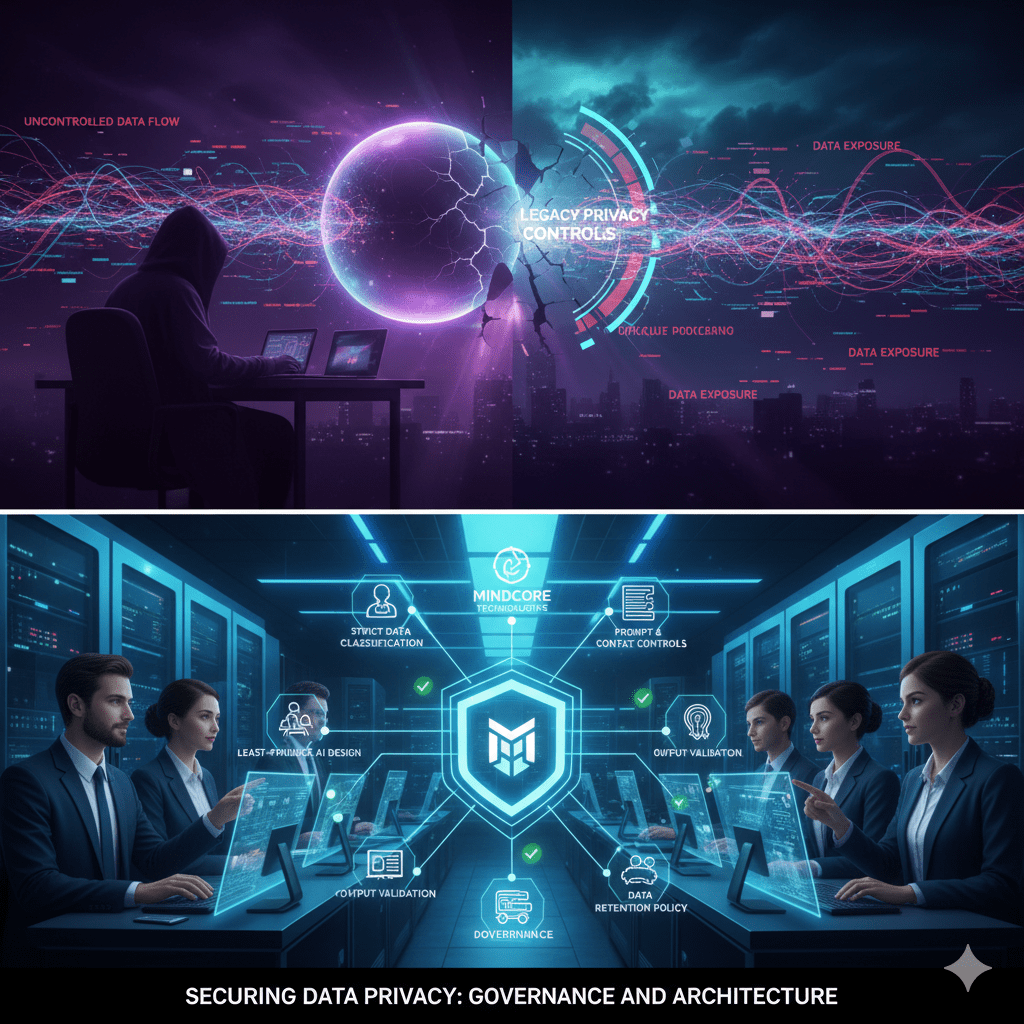

Why Traditional Privacy Controls Fail With AI

Privacy programs were built around:

- Databases

- Applications

- Users

AI introduces:

- Non-deterministic behavior

- Dynamic context building

- Indirect data exposure

Controls designed for static systems cannot manage adaptive ones without redesign.

What Securing Data Privacy Actually Requires in an AI World

Privacy protection must move upstream into architecture and governance.

1. Strict Data Classification Before AI Access

AI should never see data it does not need.

Effective controls include:

- Clear data classification policies

- Explicit allowlists for AI-accessible data

- Blocking sensitive categories by default

If data is not classified, it cannot be protected.
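A default-deny allowlist can be sketched in a few lines. The classification labels, the `AI_ALLOWED` set, and the `Record` type below are hypothetical; a real program would take them from its own classification policy.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Classification(Enum):
    PUBLIC = "public"
    INTERNAL = "internal"
    CONFIDENTIAL = "confidential"
    RESTRICTED = "restricted"


# Hypothetical allowlist: only these labels may ever reach an AI system.
AI_ALLOWED = frozenset({Classification.PUBLIC, Classification.INTERNAL})


@dataclass(frozen=True)
class Record:
    record_id: str
    text: str
    classification: Optional[Classification] = None  # None means "never classified"


def ai_may_access(record: Record) -> bool:
    """Default deny: unclassified or non-allowlisted records are blocked."""
    return record.classification in AI_ALLOWED


def filter_for_ai(records: list[Record]) -> list[Record]:
    """Return only the records an AI system is allowed to see."""
    return [r for r in records if ai_may_access(r)]
```

Note that an unclassified record is blocked, not passed through. That is the code-level version of "if data is not classified, it cannot be protected."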

2. Separation Between AI Systems and Source Data

AI should not have unrestricted access to raw systems.

Professional implementations:

- Use controlled data pipelines

- Limit AI access to sanitized datasets

- Avoid direct database connections

Isolation reduces exposure and blast radius.
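One way to picture a controlled pipeline is a sanitization step that builds the AI-facing copy of each row. The sketch below assumes a hypothetical support-ticket dataset; the field names and the key handling are illustrative only.

```python
import hashlib
import hmac

# Hypothetical field policy for a support-ticket dataset.
PASS_THROUGH_FIELDS = ("category", "priority", "body")
PSEUDONYMIZE_FIELDS = ("customer_id",)


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed hash: the same customer maps to the same token without exposing the ID."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def sanitize_row(row: dict, key: bytes) -> dict:
    """Build the AI-facing copy of a row; fields not named in the policy are dropped."""
    clean = {f: row[f] for f in PASS_THROUGH_FIELDS if f in row}
    for f in PSEUDONYMIZE_FIELDS:
        if f in row:
            clean[f] = pseudonymize(str(row[f]), key)
    return clean
```

The AI system reads only the sanitized copy and never holds a connection to the source database. Free-text fields such as `body` can still contain personal data, so this step complements prompt and output filtering rather than replacing it.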

3. Least-Privilege AI Design

AI systems should operate with minimal permissions.

Best practices include:

- Role-based AI access

- Segmented data sources

- Separate models for different sensitivity levels

Broad access is the enemy of privacy.
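Role-based AI access can be expressed as a small policy table checked before every read. The role names, data sources, and sensitivity levels here are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2


@dataclass(frozen=True)
class AIRole:
    name: str
    sources: frozenset            # data sources this AI role may read
    max_sensitivity: Sensitivity  # highest sensitivity level it may read


# Hypothetical roles; each AI workload gets only what its task needs.
ROLES = {
    "support_assistant": AIRole(
        "support_assistant", frozenset({"kb_articles", "tickets"}), Sensitivity.INTERNAL
    ),
    "marketing_writer": AIRole(
        "marketing_writer", frozenset({"kb_articles"}), Sensitivity.PUBLIC
    ),
}


def is_allowed(role_name: str, source: str, sensitivity: Sensitivity) -> bool:
    """Deny unknown roles, unlisted sources, and data above the role's ceiling."""
    role = ROLES.get(role_name)
    if role is None:
        return False
    return source in role.sources and sensitivity <= role.max_sensitivity
```

For example, `is_allowed("marketing_writer", "tickets", Sensitivity.PUBLIC)` is `False` because that role has no access to the ticket source at all, regardless of sensitivity.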

4. Prompt and Context Controls

Sensitive data often leaks through prompts.

Controls must include:

- Preventing sensitive data in user input

- Filtering prompts before processing

- Limiting how much conversation context is retained, and for how long

Prompt hygiene is a privacy requirement, not a UX concern.
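A minimal sketch of prompt filtering and bounded context retention follows. The two regular expressions are illustrative only and catch just simple email addresses and US Social Security number formats; the `scrub_prompt` and `BoundedContext` names are hypothetical.

```python
import re
from collections import deque

# Illustrative patterns only; real detection needs far broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def scrub_prompt(prompt: str) -> str:
    """Replace each detected value with a placeholder before the model sees it."""
    for label, pattern in PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


class BoundedContext:
    """Keeps only the most recent scrubbed turns; older turns are discarded."""

    def __init__(self, max_turns: int = 5):
        self._turns = deque(maxlen=max_turns)

    def add(self, prompt: str) -> None:
        self._turns.append(scrub_prompt(prompt))

    def as_list(self) -> list[str]:
        return list(self._turns)
```

Scrubbing happens before a turn is stored, so the raw value never enters the retained context. Pattern matching will miss sensitive data that has no fixed format, which is why it is one layer among several.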

5. Output Validation and Redaction

AI output must be treated as untrusted.

Professional safeguards:

- Detect sensitive data in responses

- Redact or block inappropriate output

- Require approval for high-risk responses

The AI should suggest, not disclose.
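The three safeguards above can be sketched as a single review step that returns a decision along with the text that may be released. The detector pattern and the `HIGH_RISK_MARKERS` keywords are placeholders, not a recommended rule set.

```python
import re
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    REDACT = "redact"
    NEEDS_APPROVAL = "needs_approval"


# Illustrative detectors only.
REDACTABLE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
HIGH_RISK_MARKERS = ("diagnosis", "salary", "account number")


def review_output(text: str) -> tuple[Decision, str]:
    """Treat model output as untrusted: hold, redact, or release it."""
    lowered = text.lower()
    if any(marker in lowered for marker in HIGH_RISK_MARKERS):
        # Nothing is released until a human approves the response.
        return Decision.NEEDS_APPROVAL, ""
    redacted, count = REDACTABLE.subn("[REDACTED]", text)
    if count:
        return Decision.REDACT, redacted
    return Decision.ALLOW, text
```

The high-risk check runs first so that a response needing approval is withheld entirely instead of being released in partly redacted form.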

6. Clear Retention and Deletion Policies for AI Artifacts

AI creates new data types:

- Prompts

- Outputs

- Embeddings

- Logs

Each requires:

- Defined retention periods

- Secure storage

- Deletion policies aligned with privacy obligations

Ignoring AI artifacts creates silent compliance risk.
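A retention policy for AI artifacts can start as a per-type table and a periodic sweep. The retention periods below are made-up values for illustration; actual periods depend on the organization's legal and contractual obligations.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per artifact type.
RETENTION = {
    "prompt": timedelta(days=30),
    "output": timedelta(days=30),
    "embedding": timedelta(days=180),
    "log": timedelta(days=14),
}


@dataclass(frozen=True)
class Artifact:
    artifact_id: str
    kind: str
    created_at: datetime


def is_expired(artifact: Artifact, now: datetime) -> bool:
    """Artifact kinds with no defined period count as expired, so nothing is kept by accident."""
    period = RETENTION.get(artifact.kind)
    if period is None:
        return True
    return now - artifact.created_at > period


def select_for_deletion(artifacts: list[Artifact], now: datetime) -> list[str]:
    """Return the IDs of artifacts that are past their retention period."""
    return [a.artifact_id for a in artifacts if is_expired(a, now)]
```

A scheduled job would call `select_for_deletion(artifacts, datetime.now(timezone.utc))` and pass the result to whatever storage layer holds the artifacts. Flagging unknown artifact types is a design choice in this sketch; some teams may prefer to route them to manual review instead.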

7. Governance, Not Just Technology

AI privacy is not a tool problem.

Organizations need:

- AI usage policies

- Approved AI platforms

- Clear accountability

- Regular privacy reviews

Without governance, technical controls degrade over time.

Regulatory Pressure Is Increasing

Privacy regulations do not exempt AI.

Organizations must still meet obligations such as:

- Data minimization

- Purpose limitation

- Access controls

- Breach notification requirements

Organizations that cannot explain how their AI systems handle data face regulatory exposure.

The Business Risk of Getting This Wrong

Privacy failures involving AI lead to:

- Regulatory penalties

- Legal exposure

- Loss of customer trust

- Reputational damage

- Forced AI rollbacks

The cost of cleaning up afterward is typically far higher than the cost of building controls in upfront.

How Mindcore Technologies Helps Secure AI Data Privacy

Mindcore helps organizations adopt AI without losing control of sensitive data through:

- AI data flow mapping

- Privacy-first AI architecture design

- Data classification and access controls

- Prompt and output governance

- Secure AI platform selection

- Ongoing monitoring and compliance alignment

We treat AI privacy as a security and governance discipline, not an afterthought.

A Simple Reality Check for Leaders

You have AI privacy risk if:

- AI tools access business data without restriction

- Employees use external AI platforms freely

- You cannot trace where AI data is stored

- Outputs are not reviewed or filtered

AI increases capability. It must not increase exposure.

Final Takeaway

Securing data privacy in an AI-driven world is not about slowing innovation. It is about ensuring innovation does not outpace control.

AI systems magnify whatever data practices already exist. Strong governance, least privilege, visibility, and validation turn AI into an advantage. Weak controls turn it into a liability.

Organizations that treat AI privacy as a foundational requirement will scale safely. Those that ignore it will eventually be forced to respond under pressure.