Adversarial AI is not a future risk. It is already being used to probe, evade, and outlearn security controls in real environments. Attackers are no longer guessing how defenses work. They are testing them, learning from them, and adapting in real time.

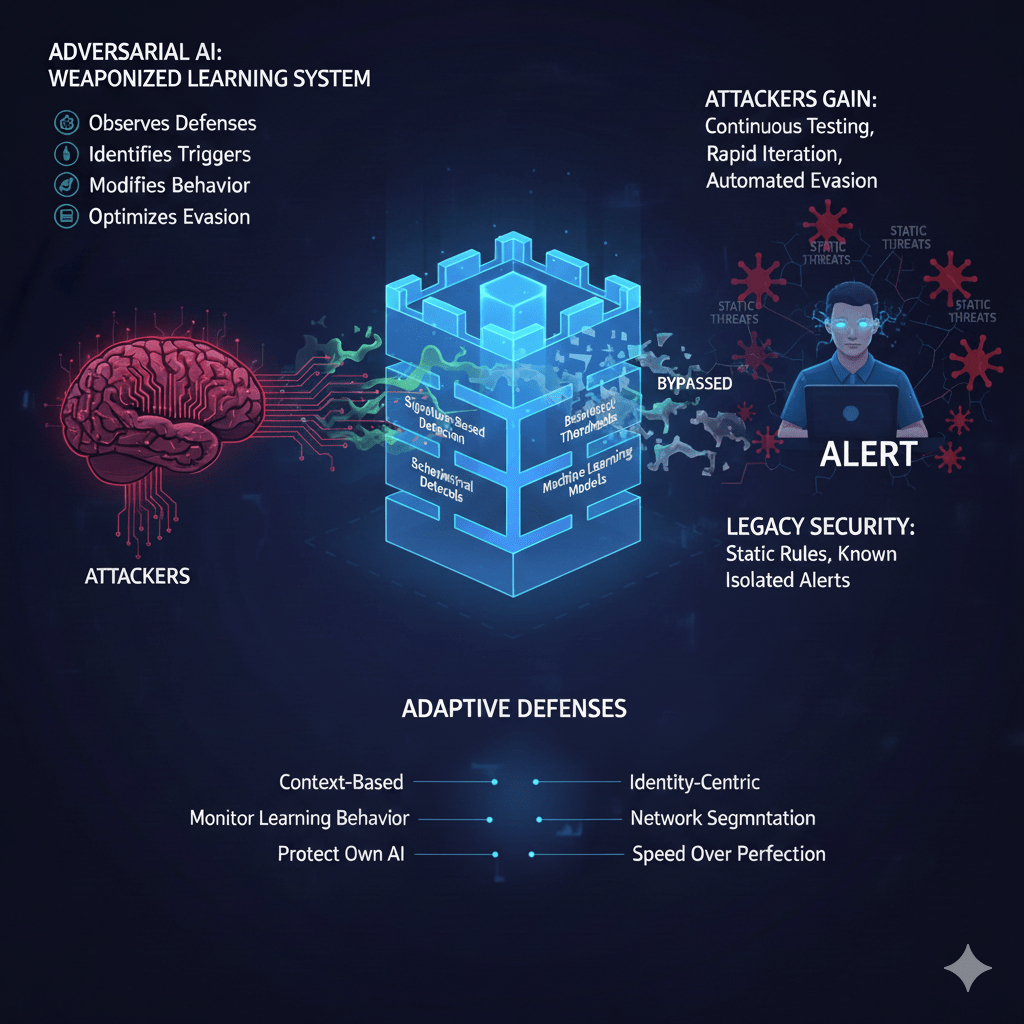

At Mindcore Technologies, we define adversarial AI as a weaponized learning system. It observes your defenses, identifies what triggers alerts, and modifies its behavior until it blends in. Most organizations are still defending against static threats. Adversarial AI punishes that assumption.

This article breaks down how adversarial AI actually works, where it bypasses security today, and how defenders must adapt.

What Adversarial AI Really Means in Cybersecurity

Adversarial AI is not just AI-powered malware.

It refers to systems that:

- Intentionally interact with security controls

- Observe detection outcomes

- Adjust behavior based on results

- Optimize evasion over time

These systems learn how your defenses think.

Why Adversarial AI Favors Attackers

Traditional attacks are limited by:

- Human trial and error

- Time and cost

- Operator attention

Adversarial AI removes those limits.

Attackers gain:

- Continuous testing at scale

- Rapid iteration

- Automated evasion learning

- Reduced operational risk

Defense teams rarely see the learning process. They only see what finally works.

How Adversarial AI Bypasses Security in Practice

1. Evasion of Signature-Based Detection

Adversarial AI mutates payloads continuously.

It tests:

- Slight code variations

- Timing changes

- Execution order

- Memory usage patterns

Anything that triggers detection is discarded. Only evasive variants persist.
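To see why exact-match signatures are so easy to outrun, consider a minimal sketch. It assumes a "signature" is simply the SHA-256 hash of a known sample. The byte strings and the `matches_signature` helper are hypothetical placeholders for illustration, not real malware or any product's API.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical "known bad" sample: harmless placeholder bytes.
known_sample = b"EXAMPLE-PAYLOAD-v1"
signature_db = {sha256_hex(known_sample)}


def matches_signature(data: bytes) -> bool:
    """Exact-match check: true only if the hash is already in the database."""
    return sha256_hex(data) in signature_db


# A variant that differs from the known sample by a single byte.
variant = b"EXAMPLE-PAYLOAD-v2"

print(matches_signature(known_sample))  # True
print(matches_signature(variant))       # False
```

Real signature engines use richer patterns than a single hash, but the weakness is the same in kind: a rule that matches on fixed content can be tested against until a variant stops matching.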

2. Manipulating Behavioral Thresholds

Many behavior-based systems rely on fixed alert thresholds.

Adversarial AI:

- Operates just below alert limits

- Spreads activity across time

- Mimics normal user patterns

This turns “abnormal” behavior into statistically acceptable behavior.
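The sketch below illustrates the low-and-slow pattern from the defender's side. It assumes synthetic event data and two made-up limits (`HOURLY_LIMIT`, `DAILY_LIMIT`). The actor names are hypothetical. The point is only that a short window can miss what a longer window catches.

```python
from collections import Counter

HOURLY_LIMIT = 10   # assumed per-hour alert threshold
DAILY_LIMIT = 100   # assumed budget over a 24-hour window


def hourly_alerts(events):
    """Return actors that exceed HOURLY_LIMIT within any single hour.

    `events` is a list of (hour, actor) tuples, one per action.
    """
    per_hour = Counter(events)
    return {actor for (_, actor), n in per_hour.items() if n > HOURLY_LIMIT}


def daily_alerts(events):
    """Return actors whose total activity exceeds DAILY_LIMIT."""
    per_actor = Counter(actor for _, actor in events)
    return {actor for actor, n in per_actor.items() if n > DAILY_LIMIT}


# Synthetic data: "svc-x" performs 9 actions every hour for 24 hours,
# "alice" performs 5 actions per hour during an 8-hour working day.
events = [(h, "svc-x") for h in range(24) for _ in range(9)]
events += [(h, "alice") for h in range(9, 17) for _ in range(5)]

print(hourly_alerts(events))  # set()
print(daily_alerts(events))   # {'svc-x'}
```

A second fixed window is still a threshold, and it can be learned like the first. It is shown here only to make the gap visible.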

3. Defeating Machine Learning Models

AI models themselves can be attacked.

Techniques include:

- Adversarial inputs that confuse classifiers

- Data poisoning during learning phases

- Inducing high-confidence benign verdicts for malicious activity

A poorly monitored AI defense that keeps learning from attacker-influenced data can be taught to treat the attacker's behavior as normal.
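A toy example makes the poisoning risk concrete. The detector below is a deliberately simple assumption, not a real product: it flags values more than 20% above a baseline and folds every accepted value back into that baseline. The function name `run_detector` and all numbers are hypothetical.

```python
def run_detector(values, baseline=100.0, tolerance=0.2, alpha=0.5):
    """Flag values more than `tolerance` above the baseline.

    Accepted values are blended into the baseline (the "learning" step),
    which is what a patient attacker can abuse.
    """
    flagged = []
    for value in values:
        if value > baseline * (1 + tolerance):
            flagged.append(value)  # rejected, baseline left unchanged
        else:
            baseline = (1 - alpha) * baseline + alpha * value
    return flagged, baseline


# A sudden jump to 300 is flagged against the original baseline of 100.
flagged_direct, _ = run_detector([300.0])
print(flagged_direct)  # [300.0]

# Climbing in 8% steps stays inside the tolerance at every step.
gradual = [100.0 * 1.08 ** k for k in range(1, 16)]
flagged_gradual, shifted_baseline = run_detector(gradual)
print(flagged_gradual)  # []

# Against the shifted baseline, the value flagged earlier now passes.
flagged_after, _ = run_detector([300.0], baseline=shifted_baseline)
print(flagged_after)  # []
```

Nothing in the gradual run looks abnormal step by step. Only a comparison against a pinned reference baseline would show how far the model has been moved.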

4. Intelligent Phishing and Social Engineering

Adversarial AI refines human-targeted attacks.

It learns:

- Which messages get responses

- Which tone works for each role

- Which timing avoids suspicion

Humans become the easiest bypass.

5. Identity and Session Abuse

Adversarial AI prefers valid access.

It focuses on:

- Credential reuse testing

- MFA fatigue exploitation

- Session token abuse

- Lateral identity movement

Many security tools trust activity that arrives with valid credentials.

Why Legacy Security Models Fail Against Adversarial AI

Most security programs assume:

- Attacks are static

- Threats repeat known patterns

- Alerts are meaningful on their own

Adversarial AI breaks all three assumptions.

Detection systems that do not learn defensively are eventually mapped and bypassed.

The Most Dangerous Aspect: Silent Learning

Adversarial AI does not need to succeed immediately.

It:

- Probes slowly

- Records failures

- Adjusts quietly

- Strikes only when confident

By the time defenders see activity, the attacker already understands the environment.

How Organizations Must Adapt Defensively

Defending against adversarial AI requires abandoning static thinking.

1. Shift From Thresholds to Context

Static thresholds are predictable.

Defense must consider:

- Identity context

- Behavioral history

- Data sensitivity

- Access justification

Context is harder to game than rules.
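As a rough sketch of what "context" can mean in practice, the example below scores an access request on the four factors listed above. The `AccessRequest` fields, the weights, and the decision cut-offs are all illustrative assumptions. A real policy would be tuned to the environment.

```python
from dataclasses import dataclass


@dataclass
class AccessRequest:
    user: str
    device_managed: bool       # identity context
    usual_hour: bool           # behavioral history: inside the user's normal hours
    resource_sensitivity: int  # data sensitivity: 1 (public) to 3 (restricted)
    has_ticket: bool           # access justification


def risk_score(req: AccessRequest) -> int:
    """Combine the context signals into one score (weights are assumptions)."""
    score = 0
    if not req.device_managed:
        score += 2
    if not req.usual_hour:
        score += 1
    score += req.resource_sensitivity - 1
    if not req.has_ticket and req.resource_sensitivity >= 2:
        score += 2
    return score


def decide(req: AccessRequest) -> str:
    """Map the score to an action: allow, step-up authentication, or deny."""
    score = risk_score(req)
    if score >= 5:
        return "deny"
    if score >= 3:
        return "step_up"
    return "allow"


routine = AccessRequest("alice", True, True, 1, False)
after_hours = AccessRequest("alice", True, False, 3, True)
suspicious = AccessRequest("alice", False, False, 3, False)

print(decide(routine))      # allow
print(decide(after_hours))  # step_up
print(decide(suspicious))   # deny
```

The same user gets three different outcomes. An attacker who learns one threshold still has to match several independent signals at once.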

2. Make Identity the Primary Control Plane

Adversarial AI exploits trust.

Controls must include:

- Phishing-resistant MFA

- Conditional access

- Short-lived sessions

- Continuous verification

If identity is compromised, everything else follows.
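Short-lived sessions and continuous verification can be sketched in a few lines. The example assumes a 15-minute lifetime and a session bound to a device ID and network prefix. The `Session` type, the `verify` function, and the sample values are hypothetical, not a specific identity provider's API.

```python
from dataclasses import dataclass

SESSION_TTL_SECONDS = 15 * 60  # assumed session lifetime


@dataclass(frozen=True)
class Session:
    user: str
    issued_at: float  # seconds since an arbitrary epoch
    device_id: str
    ip_prefix: str


def verify(session: Session, now: float, device_id: str, ip_prefix: str) -> str:
    """Re-check the session on every request, not only at login."""
    if now - session.issued_at > SESSION_TTL_SECONDS:
        return "expired"
    if device_id != session.device_id or ip_prefix != session.ip_prefix:
        return "reauthenticate"  # context changed: a stolen token is a likely cause
    return "ok"


session = Session("alice", issued_at=0.0, device_id="laptop-1", ip_prefix="203.0.113")

print(verify(session, 60.0, "laptop-1", "203.0.113"))    # ok
print(verify(session, 60.0, "unknown", "198.51.100"))    # reauthenticate
print(verify(session, 1000.0, "laptop-1", "203.0.113"))  # expired
```

A stolen token is only useful while it is valid and while the attacker can reproduce the context it was issued in. Both windows should be small.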

3. Monitor for Learning Behavior

Adversarial AI leaves signals.

Watch for:

- Repeated low-level probing

- Gradual behavior shifts

- Test-and-fail patterns

- Alert threshold exploration

These patterns matter more than individual events.
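One of these signals, the test-and-fail pattern, is simple to sketch. The function below assumes a time-ordered log of `(source, outcome)` pairs and flags any source that was blocked several times before its first success. The event format, the `probing_sources` name, and the `min_failures` value are assumptions for illustration.

```python
from collections import defaultdict


def probing_sources(events, min_failures=5):
    """Return sources blocked at least `min_failures` times before first success.

    `events` is an iterable of (source, outcome) in time order, where
    outcome is "blocked" or "allowed".
    """
    failures = defaultdict(int)
    succeeded = set()
    suspects = []
    for source, outcome in events:
        if source in succeeded:
            continue  # only the run-up to the first success is of interest
        if outcome == "blocked":
            failures[source] += 1
        elif outcome == "allowed":
            succeeded.add(source)
            if failures[source] >= min_failures:
                suspects.append(source)
    return suspects


events = (
    [("host-a", "blocked")] * 6 + [("host-a", "allowed")]
    + [("host-b", "blocked"), ("host-b", "allowed")]
)

print(probing_sources(events))  # ['host-a']
```

Each blocked attempt on its own looks like the control working. The sequence is what shows someone learning where the boundary is.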

4. Protect and Monitor Your Own AI Systems

Defensive AI must be defended.

This includes:

- Monitoring model inputs

- Detecting poisoning attempts

- Reviewing outputs for drift

- Restricting training data access

Unprotected AI becomes a liability.
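Output drift can be checked with very little code. The sketch below compares the mean of recent model scores with a trusted reference window and reports how many standard errors apart they are. The score values, the `z_limit` of 3.0, and the function names are illustrative assumptions. Production monitoring would use larger windows and more robust statistics.

```python
import statistics


def drift_z(reference, recent):
    """Return how many standard errors the recent mean sits from the reference mean."""
    mu = statistics.fmean(reference)
    sigma = statistics.stdev(reference)
    standard_error = sigma / len(recent) ** 0.5
    return (statistics.fmean(recent) - mu) / standard_error


def has_drifted(reference, recent, z_limit=3.0):
    """Flag drift when the shift exceeds `z_limit` standard errors."""
    return abs(drift_z(reference, recent)) > z_limit


# Hypothetical "maliciousness" scores from a trusted period.
reference = [0.10, 0.12, 0.08, 0.11, 0.09, 0.10, 0.13, 0.07, 0.10, 0.10]
# Recent scores that look like the reference period.
stable = [0.11, 0.09, 0.10, 0.10, 0.12, 0.08, 0.10, 0.09, 0.11, 0.10]
# Recent scores that have quietly moved lower.
shifted = [0.05, 0.06, 0.04, 0.05, 0.06, 0.05, 0.04, 0.06, 0.05, 0.05]

print(has_drifted(reference, stable))   # False
print(has_drifted(reference, shifted))  # True
```

A drift alert does not prove poisoning. It is a prompt to review what the model has been fed since the reference window was captured.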

5. Reduce the Value of Adaptation

Even adaptive attackers struggle in constrained environments.

Key strategies:

- Network segmentation

- Least-privilege access

- Limited blast radius

- Rapid isolation

Adaptation loses power when movement is restricted.
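Blast radius can be estimated as reachability in a graph of allowed connections. The sketch below uses a breadth-first search over two hypothetical network maps, one flat and one segmented. The host names and edges are invented for illustration.

```python
from collections import deque


def reachable(graph, start):
    """Return every node reachable from `start`, excluding `start` itself."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen - {start}


# Hypothetical flat network: the laptop can reach almost everything.
flat = {
    "laptop": ["fileserver", "hr-db", "build"],
    "build": ["prod-db"],
    "fileserver": ["hr-db"],
}

# The same hosts after segmentation: the laptop reaches one system only.
segmented = {
    "laptop": ["fileserver"],
    "build": ["prod-db"],
}

print(sorted(reachable(flat, "laptop")))       # ['build', 'fileserver', 'hr-db', 'prod-db']
print(sorted(reachable(segmented, "laptop")))  # ['fileserver']
```

The attacker in both cases is equally capable. What changes is how much a single compromised endpoint is worth.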

6. Emphasize Speed Over Perfection

You cannot prevent all AI-driven attacks.

You must:

- Detect earlier

- Contain faster

- Recover decisively

Time is the real advantage.

The Biggest Defensive Mistake We See

Organizations focus on improving detection accuracy instead of reducing exploitability.

If attackers can:

- Move laterally

- Abuse identity

- Access sensitive data easily

then adversarial AI will eventually succeed.

How Mindcore Technologies Defends Against Adversarial AI

Mindcore helps organizations stay ahead of adversarial AI through:

- Identity-centric security architecture

- Behavior-driven detection

- Endpoint and session protection

- AI system governance and monitoring

- Zero Trust segmentation

- Rapid containment and response design

We design defenses that assume attackers are learning.

A Simple Readiness Check

You are vulnerable to adversarial AI if:

- Detection relies on static rules

- Identity trust persists after login

- AI systems are not monitored

- Lateral movement is easy

- Low-level probing is ignored

Adversarial AI exploits predictability.

Final Takeaway

Adversarial AI represents a shift from attacking systems to learning how they work. Cybercriminals are no longer guessing. They are observing, adapting, and bypassing defenses methodically.

Organizations that treat security as static will fall behind. Those that build adaptive, identity-driven, and context-aware defenses will remain resilient even as attackers evolve.