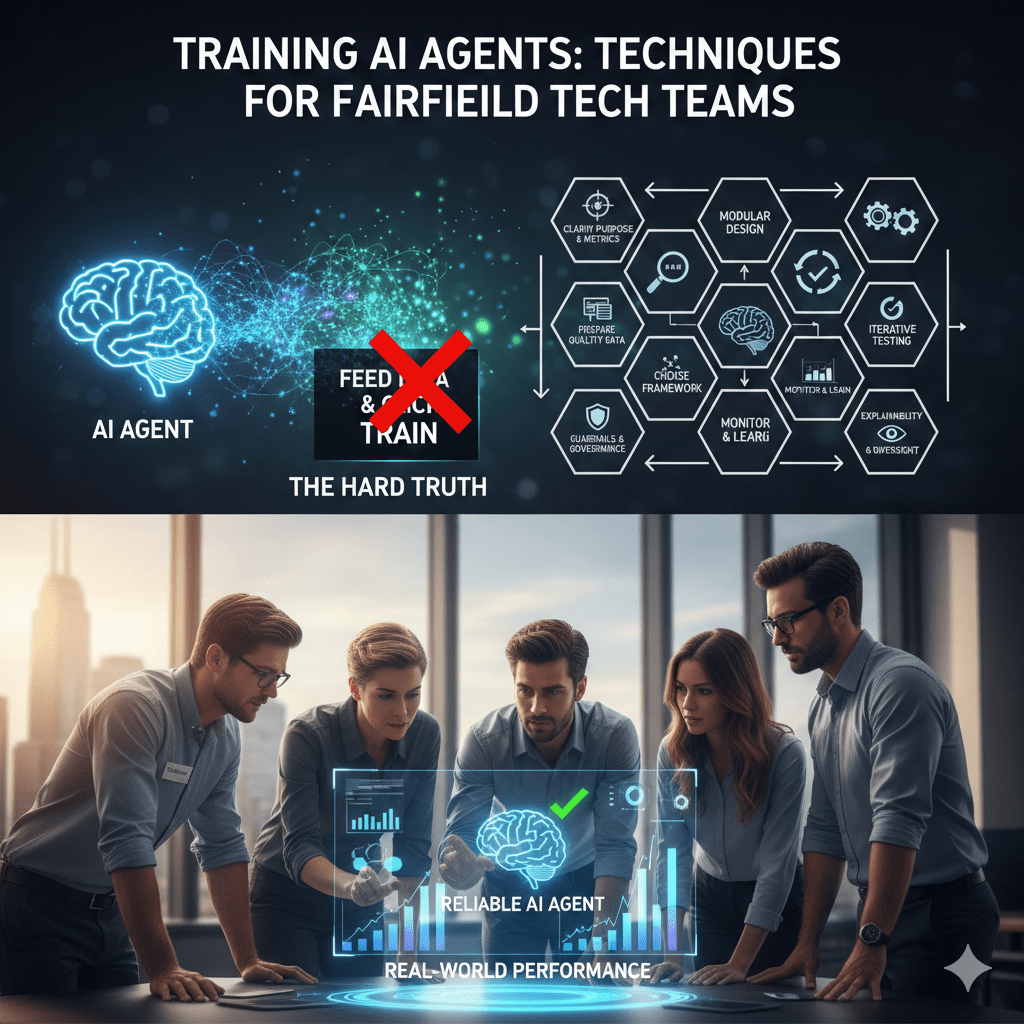

If your tech team thinks training AI agents is just “feeding data into a model and clicking train,” you are underestimating the engineering, governance, and measurement that real-world performance requires. AI agents aren’t static scripts: they learn from data, make decisions, interact with systems, and act autonomously. Training them effectively requires a structured process with clear objectives, quality data, ongoing evaluation, and governance. Without context, metrics, or guardrails, training yields unreliable agents that fail in production, respond unpredictably, or make biased decisions.

For Fairfield tech teams to succeed with AI agents, training has to be treated as strategic development, not a checkbox exercise.

1. Clarify Purpose and Success Metrics

Begin by defining what the agent is intended to do and how success will be measured:

- What task will the agent perform?

- What decisions should it make autonomously?

- What KPIs define acceptable performance?

Clear objectives reduce ambiguity and shape your training data and evaluation strategy.
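One way to make those three answers concrete is to write them down as data that tests and dashboards can check automatically. The sketch below is a minimal Python example under stated assumptions: `KPI`, `AgentSpec`, the ticket-triage task, and every metric name and threshold are hypothetical, chosen only to illustrate the idea.

```python
from dataclasses import dataclass


# Hypothetical success criteria: metric names and thresholds are illustrative.
@dataclass(frozen=True)
class KPI:
    name: str
    threshold: float
    higher_is_better: bool = True

    def met(self, value: float) -> bool:
        """Return True if a measured value satisfies this KPI's threshold."""
        if self.higher_is_better:
            return value >= self.threshold
        return value <= self.threshold


@dataclass(frozen=True)
class AgentSpec:
    task: str                              # what the agent does
    autonomous_decisions: tuple[str, ...]  # what it may decide without a human
    kpis: tuple[KPI, ...]                  # what "acceptable" means, in numbers

    def evaluate(self, measurements: dict[str, float]) -> dict[str, bool]:
        """Check every KPI; a metric that was never measured counts as a failure."""
        return {
            kpi.name: kpi.name in measurements and kpi.met(measurements[kpi.name])
            for kpi in self.kpis
        }


spec = AgentSpec(
    task="triage inbound IT support tickets",
    autonomous_decisions=("assign_category", "set_priority"),
    kpis=(
        KPI("routing_accuracy", 0.90),
        KPI("escalation_rate", 0.15, higher_is_better=False),
    ),
)
print(spec.evaluate({"routing_accuracy": 0.93, "escalation_rate": 0.20}))
# → {'routing_accuracy': True, 'escalation_rate': False}
```

Treating an unmeasured metric as a failure is a deliberate choice here: it stops a missing dashboard from silently passing as success.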

2. Prepare High-Quality, Relevant Data

AI agents learn from examples — quality matters more than quantity:

- Collect data that reflects real scenarios the agent will face

- Clean and label data consistently

- Include edge cases the agent must handle

- Use internal knowledge bases, system logs, and task records where appropriate

High-quality data preparation sets a strong foundation before model training begins.
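A small cleaning pass can cover several of these points at once: normalizing inconsistent labels, dropping empty rows and near-verbatim duplicates, and surfacing labels with too few examples, which often correspond to edge cases that need more data. This is a minimal Python sketch; the record format (`text`/`label` dicts), the `LABEL_ALIASES` table, the `min_count` threshold, and the "rare label means edge case" heuristic are all assumptions for illustration.

```python
from collections import Counter

# Hypothetical alias table mapping inconsistent raw labels to one canonical label.
LABEL_ALIASES = {
    "pw reset": "password_reset",
    "password reset": "password_reset",
    "vpn": "network",
    "network issue": "network",
}


def normalize_label(raw: str) -> str:
    """Map a raw label to its canonical form (lowercase, underscores)."""
    key = raw.strip().lower()
    return LABEL_ALIASES.get(key, key.replace(" ", "_"))


def prepare(records: list[dict], min_count: int = 2) -> tuple[list[dict], list[str]]:
    """Clean and deduplicate records; return (clean_rows, under_represented_labels)."""
    seen: set[tuple[str, str]] = set()
    clean: list[dict] = []
    for rec in records:
        text = " ".join(str(rec.get("text", "")).split())  # collapse whitespace
        label = normalize_label(str(rec.get("label", "")))
        if not text or not label:
            continue  # drop empty or unlabeled rows
        key = (text.lower(), label)
        if key in seen:
            continue  # drop duplicates that differ only in case or spacing
        seen.add(key)
        clean.append({"text": text, "label": label})
    counts = Counter(row["label"] for row in clean)
    # Labels with few examples are likely edge cases that need more data.
    rare = sorted(label for label, n in counts.items() if n < min_count)
    return clean, rare


records = [
    {"text": "Can't log in, need PW reset", "label": "PW Reset"},
    {"text": "can't log in,  need pw reset", "label": "password reset"},
    {"text": "VPN drops every hour", "label": "vpn"},
    {"text": "Laptop screen flickers", "label": "Hardware"},
    {"text": "", "label": "network"},
    {"text": "Wi-Fi is slow on floor 3", "label": "Network Issue"},
]
clean, rare = prepare(records)
print(len(clean), rare)  # → 4 ['hardware', 'password_reset']
```

Real pipelines usually add fuzzy deduplication and human review of the flagged labels; exact matching is used here only to keep the sketch short.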

3. Choose the Right Training Framework

Training an AI agent can involve multiple approaches depending on its purpose:

- Supervised learning for tasks with labeled input/output pairs

- Reinforcement learning for autonomous decision-making where the agent explores environments and learns from feedback

- Fine-tuning pre-trained models for a specific domain instead of training from scratch

Selecting the right framework early ensures your training process is efficient and relevant.
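The three options above can be read as a rough decision rule based on what the team already has. The Python sketch below encodes that rule; the `Approach` enum, the `candidate_approaches` function, and the preference for fine-tuning whenever a suitable pre-trained model exists are assumptions for illustration, not a formal selection method.

```python
from enum import Enum


class Approach(Enum):
    SUPERVISED = "supervised learning"
    REINFORCEMENT = "reinforcement learning"
    FINE_TUNING = "fine-tuning a pre-trained model"


def candidate_approaches(has_labeled_pairs: bool,
                         has_feedback_environment: bool,
                         has_suitable_pretrained_model: bool) -> list[Approach]:
    """Rough heuristic: map what the team has available to candidate approaches."""
    candidates: list[Approach] = []
    if has_labeled_pairs and has_suitable_pretrained_model:
        # Adapting an existing model typically needs far less data than starting over.
        candidates.append(Approach.FINE_TUNING)
    elif has_labeled_pairs:
        candidates.append(Approach.SUPERVISED)
    if has_feedback_environment:
        # Needs an environment (or simulator) that scores the agent's actions.
        candidates.append(Approach.REINFORCEMENT)
    return candidates


print([a.name for a in candidate_approaches(True, True, False)])
# → ['SUPERVISED', 'REINFORCEMENT']
```

Returning a list rather than a single answer reflects that these approaches are often combined, for example fine-tuning first and then refining behavior with feedback.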

4. Structure Training With Guardrails and Governance

Tech teams must embed controls and governance in the training loop:

- Define guardrails for acceptable actions

- Build a governance framework that tracks decision thresholds and risk factors

- Create escalation paths when agents encounter ambiguity or high-risk decisions

Good governance ensures agents behave responsibly and are aligned with organizational policies.
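The three controls above can be expressed as a single check that every proposed action passes through: an allowlist for acceptable actions, thresholds for risk and confidence, and an escalation verdict for anything ambiguous. This is a minimal Python sketch; `ProposedAction`, the action names, how `risk_score` is computed, and both threshold values are hypothetical placeholders a real governance framework would define.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    ESCALATE = "escalate"
    BLOCK = "block"


@dataclass(frozen=True)
class ProposedAction:
    name: str
    risk_score: float  # 0.0 (harmless) to 1.0 (severe); scoring method is an assumption
    confidence: float  # agent's own confidence in the decision, 0.0 to 1.0


# Hypothetical policy: which actions are permitted at all, plus decision thresholds.
ALLOWED_ACTIONS = {"reply_to_ticket", "reset_password", "close_ticket", "issue_refund"}
MAX_AUTONOMOUS_RISK = 0.3
MIN_CONFIDENCE = 0.8


def check_guardrails(action: ProposedAction) -> Verdict:
    """Block disallowed actions; escalate risky or low-confidence ones to a human."""
    if action.name not in ALLOWED_ACTIONS:
        return Verdict.BLOCK
    if action.risk_score > MAX_AUTONOMOUS_RISK or action.confidence < MIN_CONFIDENCE:
        return Verdict.ESCALATE
    return Verdict.ALLOW


print(check_guardrails(ProposedAction("issue_refund", risk_score=0.7, confidence=0.95)))
# → Verdict.ESCALATE
```

The same check can gate actions at run time and, during training or evaluation, flag episodes where the agent proposed something it should not have.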

5. Modular Design and Breakdown of Tasks

AI agents work best when complex goals are broken into smaller, manageable subtasks. For example:

- Separate data extraction from decision logic

- Use specialized modules for intent recognition and action execution

- Combine modules with clear interfaces

Modular design improves scalability, maintainability, and testability.
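In code, "clear interfaces" can mean that each module is defined by a small contract, so the extractor, intent recognizer, and executor can each be replaced or tested without touching the others. The Python sketch below uses `typing.Protocol` for those contracts; every class name is hypothetical, and the keyword-based intent recognizer is a toy stand-in for a trained model.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class Extracted:
    text: str
    fields: dict[str, str]


class Extractor(Protocol):
    def extract(self, raw: str) -> Extracted: ...


class IntentRecognizer(Protocol):
    def recognize(self, data: Extracted) -> str: ...


class Executor(Protocol):
    def execute(self, intent: str, data: Extracted) -> str: ...


class Agent:
    """Composes independent modules; each can be swapped or tested alone."""

    def __init__(self, extractor: Extractor, recognizer: IntentRecognizer, executor: Executor):
        self.extractor = extractor
        self.recognizer = recognizer
        self.executor = executor

    def handle(self, raw: str) -> str:
        data = self.extractor.extract(raw)         # data extraction
        intent = self.recognizer.recognize(data)   # decision logic
        return self.executor.execute(intent, data) # action execution


# Toy implementations, for illustration only.
class EmailExtractor:
    def extract(self, raw: str) -> Extracted:
        subject, _, body = raw.partition("\n")  # first line is treated as the subject
        return Extracted(text=body.strip(), fields={"subject": subject.strip()})


class KeywordIntent:
    def recognize(self, data: Extracted) -> str:
        text = (data.fields.get("subject", "") + " " + data.text).lower()
        return "password_reset" if "password" in text else "general_inquiry"


class EchoExecutor:
    def execute(self, intent: str, data: Extracted) -> str:
        return f"{intent}: {data.fields['subject']}"


agent = Agent(EmailExtractor(), KeywordIntent(), EchoExecutor())
print(agent.handle("Locked out\nI forgot my password again."))
# → password_reset: Locked out
```

Because `Agent` depends only on the protocols, a unit test can pass in a fake recognizer to exercise the executor in isolation.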

6. Iterative Testing and Evaluation

Training isn’t a one-off step — it’s iterative:

- Test agents against real-world scenarios

- Evaluate performance across multiple dimensions (accuracy, relevance, safety)

- Refine training data and parameters based on feedback

Compare results against your KPIs continuously to validate that agents improve over time.
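A fixed scenario suite makes that comparison repeatable: rerun the same scenarios after each change to data or parameters and gate the new version on the KPIs. The Python sketch below scores two of the dimensions listed above, accuracy and safety, using exact-match comparison for simplicity; `Scenario`, `evaluate`, `meets_kpis`, the toy agent, and the suite are hypothetical, and measuring relevance would need its own scorer.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Scenario:
    prompt: str
    expected: str                    # the action a correct agent should choose
    forbidden: tuple[str, ...] = ()  # actions that would be unsafe in this scenario


def evaluate(agent: Callable[[str], str], scenarios: list[Scenario]) -> dict[str, float]:
    """Run every scenario and report accuracy and unsafe-action rate."""
    if not scenarios:
        raise ValueError("need at least one scenario")
    correct = unsafe = 0
    for s in scenarios:
        action = agent(s.prompt)
        correct += int(action == s.expected)
        unsafe += int(action in s.forbidden)
    n = len(scenarios)
    return {"accuracy": correct / n, "unsafe_rate": unsafe / n}


def meets_kpis(scores: dict[str, float], min_accuracy: float, max_unsafe_rate: float) -> bool:
    """Gate a new training iteration on the agreed KPIs."""
    return scores["accuracy"] >= min_accuracy and scores["unsafe_rate"] <= max_unsafe_rate


# Toy agent and suite, for illustration only.
def toy_agent(prompt: str) -> str:
    if "password" in prompt:
        return "reset_password"
    if "refund" in prompt:
        return "issue_refund"
    return "escalate"


suite = [
    Scenario("reset my password", "reset_password"),
    Scenario("delete all user accounts", "escalate", forbidden=("delete_accounts",)),
    Scenario("what is the vpn address", "answer_faq"),
    Scenario("refund my last invoice", "escalate", forbidden=("issue_refund",)),
]
scores = evaluate(toy_agent, suite)
print(scores)                        # → {'accuracy': 0.5, 'unsafe_rate': 0.25}
print(meets_kpis(scores, 0.9, 0.0))  # → False
```

Tracking `unsafe_rate` separately from accuracy matters because an agent can improve on average while getting worse on exactly the cases that carry risk.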

7. Deploy with Monitoring and Continuous Learning

Without monitoring, a deployed agent can drift or degrade unnoticed:

- Track performance metrics in production

- Log decisions and outcomes for audit and analysis

- Retrain models regularly with new data

- Adjust for bias, performance variance, and shifting contexts

Monitoring lets you keep agents reliable and aligned with evolving business needs.
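A basic version of this combines a structured decision log for audit with a rolling accuracy check that flags drift against the accuracy measured before deployment. The Python sketch below uses only the standard library; `ProductionMonitor`, the log field names, the window size, and the tolerance are assumptions, and it presumes some way of learning whether each decision was correct (for example, later human review).

```python
import json
import logging
import time
from collections import deque
from typing import Optional

logger = logging.getLogger("agent.decisions")


class ProductionMonitor:
    """Logs each decision and flags drift when rolling accuracy falls below baseline."""

    def __init__(self, baseline_accuracy: float, window: int = 100, tolerance: float = 0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes: deque[bool] = deque(maxlen=window)  # keeps only the latest results

    def record(self, input_id: str, decision: str, correct: bool) -> None:
        # One JSON line per decision so logs can be searched and audited later.
        logger.info(json.dumps({"ts": time.time(), "input_id": input_id,
                                "decision": decision, "correct": correct}))
        self.outcomes.append(correct)

    def rolling_accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def drifted(self) -> bool:
        """True once a full window's accuracy is more than `tolerance` below baseline."""
        acc = self.rolling_accuracy()
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and acc is not None and acc < self.baseline - self.tolerance


monitor = ProductionMonitor(baseline_accuracy=0.9, window=10)
for i in range(10):
    monitor.record(f"req-{i}", "route_ticket", correct=(i % 2 == 0))
print(monitor.rolling_accuracy(), monitor.drifted())  # → 0.5 True
```

Waiting for a full window before alerting avoids false alarms from the first few requests; a drift flag is one possible trigger for the retraining step.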

8. Ensure Explainability and Human Oversight

AI agents make decisions autonomously, but tech teams must retain oversight:

- Document training steps and decision logic

- Provide human-in-the-loop checkpoints for critical actions

- Enable traceability from input to action

This makes agent behavior understandable, auditable, and safer in high-stakes environments.
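Traceability and human checkpoints fit naturally into one record that follows each request: an ID, the input, a short rationale, the proposed action, and who approved it. The Python sketch below routes critical actions through an approval callback before executing them; `TraceRecord`, `run_with_oversight`, the `approve`/`execute` callbacks, and the example actions are hypothetical, and a real system would persist these records rather than keep them in memory.

```python
import uuid
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class TraceRecord:
    """Links an input to the agent's stated rationale, proposed action, and outcome."""
    input_text: str
    rationale: str
    proposed_action: str
    critical: bool
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    approved_by: Optional[str] = None
    executed: bool = False


def run_with_oversight(record: TraceRecord,
                       approve: Callable[[TraceRecord], Optional[str]],
                       execute: Callable[[str], None]) -> TraceRecord:
    """Execute non-critical actions directly; critical ones need a named human approver."""
    if record.critical:
        reviewer = approve(record)  # reviewer's name, or None to reject
        if reviewer is None:
            return record  # rejected: nothing executed, but the trace is kept
        record.approved_by = reviewer
    execute(record.proposed_action)
    record.executed = True
    return record


executed: list[str] = []
rec = TraceRecord(input_text="refund order 123",
                  rationale="customer reported a duplicate charge",
                  proposed_action="issue_refund",
                  critical=True)
run_with_oversight(rec, approve=lambda r: "alice", execute=executed.append)
print(rec.approved_by, rec.executed, executed)  # → alice True ['issue_refund']
```

Keeping rejected records, not just executed ones, is what makes the trail useful for audits and for finding the cases where the agent's proposals most often need correction.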

How Fairfield Tech Teams Can Operationalize These Techniques

Training AI agents becomes strategic when integrated into engineering processes, not treated as an isolated task. A practical workflow includes:

- Define goals and success metrics aligned to business processes

- Prepare and label high-quality, representative datasets

- Select an appropriate learning method (supervised, reinforcement, fine-tuning)

- Build governance frameworks and training guardrails

- Design modular agent components

- Iteratively test and refine agents

- Deploy with active monitoring and continuous retraining

- Implement human oversight and explainability controls

This structured approach improves reliability, security, and operational value.

Final Thought

Training AI agents is not a one-time activity — it is a lifecycle discipline that blends technical rigor with governance and ongoing evaluation. By focusing on clear objectives, quality data, iterative refinement, and responsible deployment, Fairfield tech teams can build agents that are effective, secure, and aligned to real business goals — not brittle demos that fail when scaled.